Prof. Uri Weiser, Technion

- 1. Professor Uri Weiser, Technion, Haifa, Israel. Handling Memory Accesses in Big Data Environment. Chipex 2016. The talk covers research done by: T. Horowitz, Prof. A. Kolodny, T. Morad, Prof. A. Mendelson, Daniel Raskin, Gil Shomron, Loren Jamal, Prof. U. Weiser.

- 2. New Architecture Avenues in Big Data Environment. The era of heterogeneous computing: HW/SW that fits the application; dynamic tuning; accelerators for performance and energy efficiency. Big Data = big. In general, access to all the “Big Data” is not repeated. What are the implications?

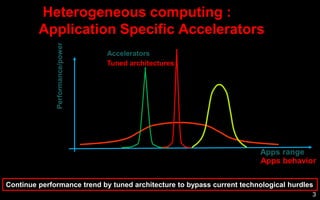

- 3. Heterogeneous computing: application-specific accelerators. Continue the performance trend with tuned architectures that bypass current technological hurdles. Figure labels: performance/power; apps range; accelerators; tuned architectures; apps behavior.

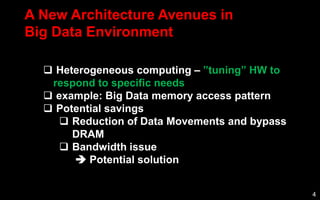

- 4. New Architecture Avenues in Big Data Environment. Heterogeneous computing: “tuning” HW to respond to specific needs. Example: the Big Data memory access pattern. Potential savings: reduction of data movement and bypassing the DRAM bandwidth issue. Potential solution.

- 5. Big Data usage of data. Input: unstructured data. Read once: Non-Temporal Memory Access. Funnel: β = BWout / BWin.

- 6. Machine Learning. Input: unstructured data. Structuring (data structuring = ETL) yields structured data (aggregation), followed by ML model creation and model usage @ client. Diagram stage labels: A, B, C.

- 7. Does Big Data exhibit a special memory access pattern? It probably should, since revisiting ALL Big Data items would cause huge, slow data transfers from the data sources. There are two modes of memory access: Temporal Memory Access and Non-Temporal Memory Access. Many Big Data computations exhibit Non-Temporal Memory Accesses and/or a Funnel operation.

- 8. Non-Temporal Memory Access. Initial analysis: Hadoop-grep single memory access pattern. ~50% of Hadoop-grep unique memory references are accessed only once.
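
The single-access share quoted for Hadoop-grep can be illustrated with a minimal sketch. It assumes a memory trace is available as a plain sequence of addresses; the function name `single_access_fraction` and the toy trace are hypothetical and are not taken from the talk or from any Hadoop tooling.

```python
from collections import Counter


def single_access_fraction(trace):
    """Return the fraction of unique addresses that are referenced exactly once."""
    counts = Counter(trace)
    if not counts:
        return 0.0
    single = sum(1 for n in counts.values() if n == 1)
    return single / len(counts)


# Hypothetical toy trace: 0x10 and 0x20 are reused, the other four are read once.
trace = [0x10, 0x20, 0x30, 0x10, 0x40, 0x20, 0x50, 0x60]
print(f"single-access share: {single_access_fraction(trace):.2f}")
```

A real analysis would feed addresses from a simulator or binary-instrumentation trace rather than a literal list.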

- 9. Non-Temporal Memory Accesses: preliminary results. WordCount, access to storage: non-temporal locality. Sort, access to storage: NO non-temporal locality. Plots: WordCount I/O utilization and Sort I/O, access rate [KB/s] vs. time [s].

- 10. Where is energy wasted? DRAM; limited BW.

- 11. From: Mark Horowitz, Stanford, “Computing’s Energy Problems”. From: Bill Dally (nVidia and Stanford), “Efficiency and Parallelism, the challenges of future computing”.

- 12. Energy: DRAM

- 13. Memory Subsystem: copies. Hierarchy: Core, Registers, L1$, L2$, LL Cache, DRAM, NV Storage. Size labels: KBs, 10’s KBs, MBs, 10’s MBs, GBs, TBs. BW labels: 3 GB/sec, 25 GB/sec, 500 GB/sec, TB/sec. From source to destination: copy 1 (main memory), copy 2 (LL Cache), copy 3 (L2 Cache), copy 4 (L1 Cache), copy 5 (Registers).

- 14. Memory Subsystem: DRAM bypass == DDIO. Hierarchy: Core, Registers, L1$, L2$, LL Cache, DRAM, NV Storage. BW labels: 3-20 GB/sec, 25 GB/sec, 500 GB/sec, TB/sec. From source to destination: copy 1 (main memory), copy 2 (LL Cache), copy 3 (L2 Cache), copy 4 (L1 Cache), copy 5 (Registers). Potential savings: at 0.5 nJ/B (DRAM) and 10 to 20 GB/s of NV BW, 5 W to 10 W. Reference: “Optimizing Read-Once Data Flow in Big-Data Applications”, Morad, Shomron, Erez, Weiser, Kolodny, Computer Architecture Letters, 2016.
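
The 5 W to 10 W figure follows from multiplying the DRAM energy per byte by the bytes per second that would bypass DRAM. A minimal sketch of that arithmetic, assuming 1 GB = 10^9 bytes (the slide does not state the convention) and using a hypothetical helper name:

```python
DRAM_ENERGY_J_PER_BYTE = 0.5e-9  # 0.5 nJ/B, the DRAM figure quoted on the slide


def dram_bypass_power_watts(nv_bandwidth_gb_per_s,
                            energy_j_per_byte=DRAM_ENERGY_J_PER_BYTE):
    """Power saved when this much traffic no longer passes through DRAM."""
    bytes_per_second = nv_bandwidth_gb_per_s * 1e9  # assumption: decimal gigabytes
    return bytes_per_second * energy_j_per_byte


for bw in (10, 20):  # the NV bandwidth range quoted on the slide, in GB/s
    print(f"{bw} GB/s -> {dram_bypass_power_watts(bw):.1f} W")
```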

- 15. Bandwidth: when should we use a Funnel at the data source?

- 16. A: Bandwidth issue. The memory hierarchy is optimized for Temporal Locality; systems are built for it. Hierarchy: Core, Registers, L1$, L2$, LLC Cache, DRAM, NV Storage. Size labels: KBs, 10’s KBs, MBs, 10’s MBs, GBs, TBs. BW labels: 3-20 GB/sec, 25 GB/sec, 500 GB/sec, TB/sec. Figure labels: highest bandwidth; existing BW; NTMA desired BW.

- 17. B: Memory access per operation impacts BW and CPU utilization. Plots: bandwidth [MB/s] and CPU utilization [%] vs. # of cores, for Read Once (Non-Temporal Memory Accesses) and for Temporal Memory Accesses. Hint: memory access per operation.

- 18. Solution: flow of “Non-Temporal Data Accesses” through the Funnel. Hierarchy: Core, Registers, L1$, L2$, LLC Cache, DRAM, NV Storage. Use the Funnel when a bandwidth bottleneck occurs: “high” memory accesses per instruction; limited BW; non-temporal-locality memory access. *Private communication with Moinuddin Qureshi.

- 19. “Funnel”ing “Read-Once” data in storage. Typical SSD architecture*. *Kang, Yangwook, Yang-suk Kee, Ethan L. Miller, and Chanik Park. "Enabling cost-effective data processing with smart SSD." In Mass Storage Systems and Technologies (MSST), 2013 IEEE 29th Symposium on, pp. 1-12. IEEE, 2013. **K. Eshghi and R. Micheloni. “SSD Architecture and PCI Express Interface”.

- 20. Analytical model of the Funnel. Diagram: bandwidth in (BW IN), Funnel, bandwidth out (BW OUT), post-process. β = BWOUT / BWIN.
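
The funnel ratio (bandwidth out divided by bandwidth in, the slides' beta) can be measured for any filter-style operation. The sketch below is only an illustration under assumed inputs: `funnel_ratio`, the log-like records, and the "keep error lines" predicate are hypothetical and stand in for whatever read-once work a funnel would perform.

```python
def funnel_ratio(records, keep):
    """Apply a filter to byte records and return (beta, kept_records).

    beta is bytes forwarded divided by bytes read, i.e. BW out / BW in
    for a funnel that consumes its input at a steady rate.
    """
    bytes_in = 0
    bytes_out = 0
    kept = []
    for rec in records:
        bytes_in += len(rec)
        if keep(rec):
            bytes_out += len(rec)
            kept.append(rec)
    beta = bytes_out / bytes_in if bytes_in else 0.0
    return beta, kept


# Hypothetical grep-like funnel: forward only the lines that start with "error".
records = [
    b"error: disk full",
    b"ok: request served",
    b"ok: request served",
    b"error: timeout",
    b"ok: request served",
]
beta, kept = funnel_ratio(records, lambda r: r.startswith(b"error"))
print(f"beta = {beta:.3f}, forwarded {len(kept)} of {len(records)} records")
```

A smaller beta means less data has to cross the link behind the funnel, which is the case the talk targets.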

- 21. Proposed Architecture. Baseline configuration (PCIe TL B): SSD storage; the CPU performs NTMA and TMA work. Funnel configurations (PCIe TL B 2,LcE): the SSD performs NTMA work through the Funnel; the CPU performs TMA work. B = bandwidth.

- 22. Funnel performance. Plot labels: performance improvement; beta; CPU becomes bottleneck (marked twice); 1 PCIe BW; 1 SSD BW. Baseline (PCIe TL B): SSD storage; the CPU performs NTMA and TMA work. Funnel configuration (PCIe TL B 2,LcE): the SSD performs NTMA work through the Funnel; the CPU performs TMA work. B = bandwidth.
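
One way to reason about where a funnel helps is a simple bottleneck model. The sketch below is an assumption made for illustration, not the analytical model from the talk: it treats the system as a pipeline whose throughput (in GB/s of raw input data) is set by its slowest stage, and it assumes the CPU can consume its input at the same rate in both configurations. All parameter names and numbers are hypothetical.

```python
def baseline_throughput(ssd_bw, pcie_bw, cpu_rate):
    """Raw-data throughput when all data crosses PCIe and the CPU does all the work."""
    return min(ssd_bw, pcie_bw, cpu_rate)


def funnel_throughput(ssd_bw, pcie_bw, cpu_rate, funnel_rate, beta):
    """Raw-data throughput when a funnel at the SSD forwards only a beta fraction.

    The link and the CPU only see beta bytes per raw byte, so in raw-data
    terms their limits are scaled by 1 / beta (assumed model).
    """
    if not 0 < beta <= 1:
        raise ValueError("beta must be in (0, 1]")
    return min(ssd_bw, funnel_rate, pcie_bw / beta, cpu_rate / beta)


# Hypothetical numbers in GB/s: a fast SSD behind a slower link.
base = baseline_throughput(ssd_bw=10, pcie_bw=3, cpu_rate=5)
funn = funnel_throughput(ssd_bw=10, pcie_bw=3, cpu_rate=5, funnel_rate=8, beta=0.25)
print(f"baseline {base} GB/s, funnel {funn} GB/s, speedup {funn / base:.2f}x")
```

Under these assumptions the link limits the baseline, while the funnel engine itself limits the funnel configuration; other parameter choices move the bottleneck to the SSD or the CPU.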

- 23. Funnel energy. Plot labels: Funnel improvement; energy; beta; CPU becomes the bottleneck (marked twice); Funnel processor overhead. Baseline (PCIe TL B): SSD storage; the CPU performs NTMA and TMA work. Funnel configuration (PCIe TL B 2,LcE): the SSD performs NTMA work through the Funnel; the CPU performs TMA work. B = bandwidth.

- 24. Solution? Non-Temporal Memory Accesses should be processed as close as possible to the data source. Data that exhibits Temporal Locality should use the current memory hierarchy. Use Machine Learning (context aware*) to distinguish between the two phases. Open questions: SW model; shared data; HW implementation; computational requirements at the “Funnel”. *Reference: “Semantic locality and Context based prefetching”, Peled, Mannor, Weiser, Etsion, ISCA 2015.

- 25. Summary. Memory access is a critical path in computing. The Funnel should be used to: resolve systems’ BW bottleneck for specific applications; solve the system’s BW issues for “Read Once” cases; reduce data movement; free up the system’s memory resources (re-Spark). Simple, energy-efficient engines at the front end. Issues …


![Slide 9: Non-Temporal Memory Accesses, preliminary results. WordCount I/O utilization and Sort I/O, access rate [KB/s] vs. time [s]](https://image.slidesharecdn.com/prof-160529134516/85/Prof-Uri-Weiser-Technion-9-320.jpg)

![Slide 17: Memory access per operation impacts BW and CPU utilization. Bandwidth [MB/s] and CPU utilization [%] vs. # of cores, for Read Once (Non-Temporal) and Temporal Memory Accesses](https://image.slidesharecdn.com/prof-160529134516/85/Prof-Uri-Weiser-Technion-17-320.jpg)