Unit 5 Morphological Image Processing Advanced Topics in Digital Image Processing.pptx

- 1. Unit 5 Morphological Image Processing Advanced Topics in Digital Image Processing

- 2. 5.1 Basics of Mathematical Morphology 5.2 Dilation and Erosion Operations 5.3 Opening and Closing Operations 5.4 Applications of Morphological Operations 5.5 Multispectral and Hyperspectral Imaging 5.6 3D Image Processing 5.7 Image Registration and Fusion 5.8 Emerging Trends in Image Processing Technologies

- 3. 5.1 Basics of Mathematical Morphology Mathematical morphology is a tool for extracting image components that are useful for representation and description. The technique was originally developed by Matheron and Serra [3] at the Ecole des Mines in Paris. It is a set-theoretic method of image analysis that provides a quantitative description of geometrical structures. (At the Ecole des Mines they were interested in analysing geological data and the structure of materials.) Morphology can provide boundaries of objects, their skeletons, and their convex hulls. It is also useful for many pre- and post-processing techniques, especially edge thinning and pruning. Generally speaking, most morphological operations are based on simple expanding and shrinking operations. The primary application of morphology is to binary images, though it is also used on grey-level images. It can also be useful on range images. (A range image is one where grey levels represent the distance from the sensor to the objects in the scene rather than the intensity of light reflected from them.)

- 4. Set operations The two basic morphological set transformations are erosion and dilation. These transformations involve the interaction between an image A (the object of interest) and a structuring set B, called the structuring element. Typically the structuring element B is a circular disc in the plane, but it can be any shape. The image and structuring element sets need not be restricted to sets in the 2D plane, but could be defined in 1, 2, 3 (or higher) dimensions. Let A and B be subsets of $\mathbb{Z}^2$. The translation of A by x is denoted $A_x$ and is defined as $A_x = \{a + x : a \in A\}$. The complement of A is denoted $A^c$, and the difference of two sets A and B is denoted $A - B$. The reflection of B, denoted $\hat{B}$, is defined as $\hat{B} = \{-b : b \in B\}$.
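
The set operations on this slide can be tried out directly with Python sets. The following is a minimal sketch, assuming each pixel is a `(row, col)` tuple; the helper names `translate`, `reflect` and `complement` are illustrative, and the complement is taken inside a small finite `domain` because the full integer plane cannot be stored.

```python
# An image or structuring element is modelled as a set of (row, col) points.

def translate(A, x):
    """Translation of A by x: every point of A shifted by the vector x."""
    dr, dc = x
    return {(r + dr, c + dc) for (r, c) in A}

def reflect(B):
    """Reflection of B: every point of B negated about the origin."""
    return {(-r, -c) for (r, c) in B}

def complement(A, domain):
    """Complement of A, restricted to a finite domain (an assumption of this sketch)."""
    return domain - A

A = {(0, 0), (0, 1), (1, 0)}
B = {(0, 0), (0, 1)}
domain = {(r, c) for r in range(3) for c in range(3)}

shifted = translate(A, (1, 1))     # A moved one row down and one column right
mirrored = reflect(B)              # B mirrored through the origin
difference = A - B                 # points of A that are not in B
outside = complement(A, domain)    # points of the 3x3 domain not in A
```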

- 5. 5.2 Dilation and Erosion Operations Dilation Dilation is a morphological operation that expands the boundaries of an object in an image. This is done by sliding a structuring element over the image; the structuring element determines the size and shape of the dilation. The mechanics resemble convolution, but the weighted sum is replaced by a logical OR for binary images (or a maximum for grey-level images). The output of the dilation operation is a new image in which the objects of the original image are expanded, or dilated.
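
As an illustration, binary dilation can be written as the set of all sums of an object point and a structuring-element point. This is a sketch under the assumption that images are sets of `(row, col)` tuples; the function name `dilate` and the cross-shaped element are choices made for this example only.

```python
def dilate(A, B):
    """Binary dilation: every point a + b with a in A and b in B."""
    return {(ar + br, ac + bc) for (ar, ac) in A for (br, bc) in B}

# A two-pixel horizontal object and a cross-shaped structuring element.
A = {(1, 1), (1, 2)}
cross = {(0, 0), (-1, 0), (1, 0), (0, -1), (0, 1)}

grown = dilate(A, cross)   # the object plus a one-pixel rim in the 4 main directions
```

Because the cross contains the origin, every original pixel is still present in `grown`.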

- 6. Erosion Operations Erosion is a morphological operation that shrinks the boundaries of an object in an image. This is done by sliding a structuring element over the image; the structuring element determines the size and shape of the erosion. The mechanics resemble convolution, but the weighted sum is replaced by a logical AND for binary images (or a minimum for grey-level images). The output of the erosion operation is a new image in which the objects of the original image are eroded, or shrunk.
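
Binary erosion can be sketched in the same set-based style: a position survives only if the structuring element, moved to that position, lies completely inside the object. The representation (sets of `(row, col)` tuples) and the name `erode` are assumptions of this example, and `B` is assumed to be non-empty.

```python
def erode(A, B):
    """Binary erosion: all shifts z such that B translated by z fits inside A."""
    b0r, b0c = next(iter(B))
    # Any valid shift z must place b0 on some point of A, so these candidates suffice.
    candidates = {(ar - b0r, ac - b0c) for (ar, ac) in A}
    return {
        (zr, zc)
        for (zr, zc) in candidates
        if all((zr + br, zc + bc) in A for (br, bc) in B)
    }

square = {(r, c) for r in range(4) for c in range(4)}        # 4x4 block
box3 = {(dr, dc) for dr in (-1, 0, 1) for dc in (-1, 0, 1)}  # 3x3 element

core = erode(square, box3)   # only the inner 2x2 block survives
```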

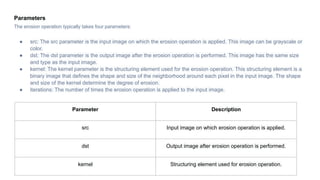

- 7. Parameters The erosion operation typically takes four parameters: ● src: The src parameter is the input image on which the erosion operation is applied. This image can be grayscale or color. ● dst: The dst parameter is the output image after the erosion operation is performed. This image has the same size and type as the input image. ● kernel: The kernel parameter is the structuring element used for the erosion operation. This structuring element is a binary image that defines the shape and size of the neighborhood around each pixel in the input image. The shape and size of the kernel determine the degree of erosion. ● iterations: The number of times the erosion operation is applied to the input image.

  | Parameter | Description |
  |---|---|
  | src | Input image on which erosion operation is applied. |
  | dst | Output image after erosion operation is performed. |
  | kernel | Structuring element used for erosion operation. |
  | iterations | Number of times the erosion operation is applied. |
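
To make the role of each parameter concrete, here is a pure-Python sketch of an erosion routine that takes `src`, `kernel` and `iterations` and returns the result as `dst`. The function `erode_grid` is hypothetical and is not any library's implementation; it assumes a binary image stored as a list of 0/1 rows, a kernel anchored at its centre, and pixels outside the image treated as background.

```python
def erode_grid(src, kernel, iterations=1):
    """Binary erosion of a 0/1 grid, repeated `iterations` times; returns dst."""
    rows, cols = len(src), len(src[0])
    kr, kc = len(kernel) // 2, len(kernel[0]) // 2   # anchor at the kernel centre
    offsets = [(i - kr, j - kc)
               for i, krow in enumerate(kernel)
               for j, v in enumerate(krow) if v]
    dst = [row[:] for row in src]                    # same size and type as src
    for _ in range(iterations):
        cur = dst
        dst = [[0] * cols for _ in range(rows)]
        for r in range(rows):
            for c in range(cols):
                # Keep the pixel only if every kernel position lands on foreground.
                # Positions outside the image count as background (assumption).
                dst[r][c] = int(all(
                    0 <= r + dr < rows and 0 <= c + dc < cols and cur[r + dr][c + dc]
                    for dr, dc in offsets))
    return dst

src = [[1] * 7 for _ in range(7)]          # 7x7 block of foreground
kernel = [[1, 1, 1] for _ in range(3)]     # 3x3 structuring element

once = erode_grid(src, kernel, iterations=1)    # 5x5 block remains
twice = erode_grid(src, kernel, iterations=2)   # 3x3 block remains
```

Each extra iteration peels one more layer off the object, which is why a larger `iterations` value has an effect similar to a larger kernel.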

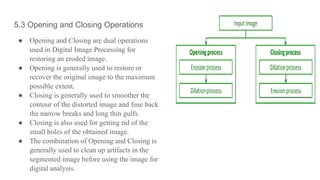

- 9. 5.3 Opening and Closing Operations ● Opening and Closing are dual operations used in Digital Image Processing; each combines one erosion and one dilation. ● Opening is generally used to restore or recover the original image to the maximum possible extent after erosion. ● Closing is generally used to smooth the contour of the distorted image and fuse back the narrow breaks and long thin gulfs. ● Closing is also used for getting rid of the small holes in the obtained image. ● The combination of Opening and Closing is generally used to clean up artifacts in the segmented image before using the image for digital analysis.

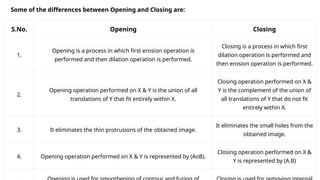

- 10. Some of the differences between Opening and Closing are:

  | S.No. | Opening | Closing |
  |---|---|---|
  | 1. | Erosion is performed first, followed by dilation. | Dilation is performed first, followed by erosion. |
  | 2. | The opening of A by B is the union of all translations of B that fit entirely within A. | The closing of A by B is the complement of the union of all translations of B that lie entirely outside A (for a symmetric B). |
  | 3. | It eliminates the thin protrusions of the obtained image. | It eliminates the small holes from the obtained image. |
  | 4. | The opening of A by B is written $A \circ B$. | The closing of A by B is written $A \bullet B$. |
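
The two descriptions of opening (erosion followed by dilation, and the union of all translations of the structuring element that fit inside the object) can be checked against each other on a small example. This is a sketch assuming images are sets of `(row, col)` tuples; all function names are illustrative.

```python
def translate(S, z):
    return {(r + z[0], c + z[1]) for (r, c) in S}

def erode(A, B):
    """All shifts z for which B translated by z lies inside A."""
    b0 = next(iter(B))
    candidates = {(a[0] - b0[0], a[1] - b0[1]) for a in A}
    return {z for z in candidates if translate(B, z) <= A}

def dilate(A, B):
    return {(a[0] + b[0], a[1] + b[1]) for a in A for b in B}

def opening_by_fitting(A, B):
    """Union of all translations of B that fit entirely within A."""
    b0 = next(iter(B))
    result = set()
    for a in A:
        Bz = translate(B, (a[0] - b0[0], a[1] - b0[1]))
        if Bz <= A:
            result |= Bz
    return result

block = {(r, c) for r in range(3) for c in range(3)}
A = block | {(1, 3), (1, 4)}              # 3x3 block with a thin protrusion
B = {(0, 0), (0, 1), (1, 0), (1, 1)}      # 2x2 structuring element

opened_a = dilate(erode(A, B), B)         # erosion, then dilation
opened_b = opening_by_fitting(A, B)       # union of fitting translations
```

Both routes give the plain 3x3 block: the one-pixel-wide protrusion cannot hold a 2x2 element, so it is removed.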

- 11. 5.4 Applications of Morphological Operations 1. Erosion Erosion is a fundamental morphological operation that reduces the size of objects in a binary image. It works by removing pixels from the boundaries of objects. ● Purpose: To remove small noise, detach connected objects, and erode boundaries. ● How it Works: The structuring element slides over the image, and for each position, if all the pixels under the structuring element match the foreground, the pixel in the output image is set to the foreground. Otherwise, it is set to the background. 2. Dilation Dilation is the opposite of erosion and is used to increase the size of objects in an image. ● Purpose: To join adjacent objects, fill small holes, and enhance features. ● How it Works: The structuring element slides over the image, and for each position, if any pixel under the structuring element matches the foreground, the pixel in the output image is set to the foreground.

- 12. 3. Opening Opening is a compound operation that involves erosion followed by dilation. ● Purpose: To remove small objects or noise from the image while preserving the shape and size of larger objects. ● How it Works: First, the image undergoes erosion, which removes small objects and noise. Then, dilation is applied to restore the size of the remaining objects to their original dimensions. 4. Closing Closing is another compound operation that consists of dilation followed by erosion. ● Purpose: To fill small holes and gaps in objects while preserving their overall shape. ● How it Works: First, dilation is applied to the image, filling small holes and gaps. Then, erosion is applied to restore the original size of the objects.
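
The complementary behaviour of opening and closing can be seen on a toy image that contains one noise pixel and one small hole. This is a sketch assuming images are sets of `(row, col)` tuples and a 3x3 structuring element; the helper names are illustrative.

```python
def dilate(A, B):
    return {(a[0] + b[0], a[1] + b[1]) for a in A for b in B}

def erode(A, B):
    b0 = next(iter(B))
    candidates = {(a[0] - b0[0], a[1] - b0[1]) for a in A}
    return {z for z in candidates
            if all((z[0] + b[0], z[1] + b[1]) in A for b in B)}

def opening(A, B):
    return dilate(erode(A, B), B)     # erosion followed by dilation

def closing(A, B):
    return erode(dilate(A, B), B)     # dilation followed by erosion

box3 = {(dr, dc) for dr in (-1, 0, 1) for dc in (-1, 0, 1)}

block = {(r, c) for r in range(3, 10) for c in range(3, 10)}   # 7x7 object
image = (block - {(6, 6)}) | {(0, 0)}   # one-pixel hole plus one noise pixel

opened = opening(image, box3)    # noise pixel removed, hole still present
closed = closing(image, box3)    # hole filled, noise pixel still present
cleaned = closing(opened, box3)  # both artifacts removed
```

Applying opening and then closing leaves only the solid 7x7 object, which matches the clean-up use described for the combination of the two operations.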

- 13. 5. Hit-or-Miss Transform The hit-or-miss transform is used to find specific patterns or shapes in a binary image. ● Purpose: To detect specific configurations or shapes in the image. ● How it Works: It uses a pair of structuring elements: one for the foreground and one for the background. The operation looks for places where the foreground structuring element matches the foreground in the image, and the background structuring element matches the background in the image. 6. Morphological Gradient The morphological gradient is the difference between the dilation and erosion of an image. ● Purpose: To highlight the boundaries or edges of objects in the image. ● How it Works: By subtracting the eroded image from the dilated image, the boundaries of the objects are emphasized.
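
Both operations on this slide can be sketched with sets of `(row, col)` tuples. In the example below, the hit-or-miss transform uses a foreground element `fg` and a background element `bg` chosen to detect isolated pixels, and the gradient is the dilation minus the erosion. The function names and the chosen elements are assumptions made for illustration.

```python
def dilate(A, B):
    return {(a[0] + b[0], a[1] + b[1]) for a in A for b in B}

def erode(A, B):
    b0 = next(iter(B))
    candidates = {(a[0] - b0[0], a[1] - b0[1]) for a in A}
    return {z for z in candidates
            if all((z[0] + b[0], z[1] + b[1]) in A for b in B)}

def hit_or_miss(A, fg, bg):
    """Positions where fg lies on foreground and bg lies on background."""
    f0 = next(iter(fg))
    candidates = {(a[0] - f0[0], a[1] - f0[1]) for a in A}
    return {z for z in candidates
            if all((z[0] + d[0], z[1] + d[1]) in A for d in fg)
            and all((z[0] + d[0], z[1] + d[1]) not in A for d in bg)}

def gradient(A, B):
    """Morphological gradient: dilation minus erosion."""
    return dilate(A, B) - erode(A, B)

box3 = {(dr, dc) for dr in (-1, 0, 1) for dc in (-1, 0, 1)}

# Pattern for an isolated pixel: foreground at the centre, background all around.
fg = {(0, 0)}
bg = box3 - {(0, 0)}
A = {(0, 0), (3, 3), (3, 4)}
isolated = hit_or_miss(A, fg, bg)        # only (0, 0) has no neighbours

block = {(r, c) for r in range(1, 4) for c in range(1, 4)}   # 3x3 object
edge = gradient(block, box3)             # band around the object boundary
```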

- 14. 7. Top-Hat Transform The top-hat transform is used to extract small elements and details from an image. ● Purpose: To enhance bright objects on a dark background or vice versa. ● How it Works: There are two types: white top-hat (original image minus the result of opening) and black top-hat (result of closing minus the original image). 8. Skeletonization Skeletonization reduces objects in a binary image to their skeletal form. ● Purpose: To simplify objects to their essential structure while preserving their connectivity. ● How it Works: It iteratively thins the objects, removing boundary pixels whose removal does not break connectivity, until the objects are reduced to a minimal, skeletal form.
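
The white top-hat can be illustrated on a one-dimensional grey-level signal, where erosion and dilation become sliding minimum and maximum filters. This is a sketch under the assumption of a flat structuring element of half-width `k` and windows that are simply clipped at the signal ends; the function names are illustrative.

```python
def erode1d(f, k):
    """Grey-level erosion: minimum over a window of half-width k."""
    n = len(f)
    return [min(f[max(0, i - k):min(n, i + k + 1)]) for i in range(n)]

def dilate1d(f, k):
    """Grey-level dilation: maximum over a window of half-width k."""
    n = len(f)
    return [max(f[max(0, i - k):min(n, i + k + 1)]) for i in range(n)]

def opening1d(f, k):
    return dilate1d(erode1d(f, k), k)

def white_tophat(f, k):
    """Original signal minus its opening."""
    return [a - b for a, b in zip(f, opening1d(f, k))]

# A narrow bright spike (index 2) and a wider bright plateau (indices 5 to 7).
signal = [10, 10, 60, 10, 10, 40, 40, 40, 10, 10]

opened = opening1d(signal, 1)    # spike removed, plateau kept
detail = white_tophat(signal, 1) # only the spike remains
```

The plateau is as wide as the 3-sample window, so the opening keeps it and the top-hat suppresses it; only the detail narrower than the structuring element is extracted.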

- 15. 5.6 3D Image Processing 3D image processing is commonly used in medical imaging to analyze DICOM or NIfTI images from radiographic sources like MRI or CT scans. You can also use 3D image processing techniques in microscopy to detect and analyze tissue samples or trace neurons. Beyond medical imaging, you can use 3D image processing techniques to process security scans of baggage or to analyze scans of materials to understand their structure. Other application areas include video activity recognition for consumer electronics or aerial surveillance for defense systems.

- 16. There are many techniques you can use when processing 3D image data. These techniques vary based on the tasks you’re trying to accomplish – including importing, visualizing, processing, and analyzing your data.

- 17. 5.5 Multispectral and Hyperspectral Imaging 1. Multispectral Imaging Multispectral imaging refers to capturing images across a limited number of broad bands of the electromagnetic spectrum. These bands typically include visible light (e.g., red, green, and blue), as well as infrared wavelengths, but the number of bands is usually fewer than in hyperspectral imaging. Key Characteristics: ● Number of Bands: Multispectral sensors usually capture 3 to 15 bands of light. ● Wavelength Range: These bands can span visible (400-700 nm), near-infrared (700-1,100 nm), and sometimes shortwave infrared (1,100-2,500 nm). ● Resolution: The spatial resolution can vary, but it's often designed for large-area scans and moderate detail. Common Uses: ● Agriculture: To monitor plant health, detect drought stress, and analyze soil properties. ● Environmental Monitoring: Tracking deforestation, water bodies, and land cover changes. ● Remote Sensing: Used by satellites like Landsat or MODIS to monitor the Earth's surface. ● Military and Defense: For identifying targets or surveillance over large areas.

- 18. 2. Hyperspectral Imaging Hyperspectral imaging goes a step further than multispectral imaging by capturing a much larger number of narrower, continuous bands across a broader range of the electromagnetic spectrum. Key Characteristics: ● Number of Bands: Hyperspectral systems typically collect a hundred or more narrow spectral bands (e.g., 100 to 200+). ● Wavelength Range: These bands can cover the visible, near-infrared (NIR), shortwave infrared (SWIR), and even the thermal infrared (TIR) ranges, allowing for a much more detailed spectrum of light. ● High Spectral Resolution: The narrower bands allow for more precise identification of materials and chemical properties. Common Uses: ● Mineral Mapping: Identifying specific minerals on the Earth’s surface based on their spectral signatures. ● Environmental and Ecological Studies: Detailed vegetation analysis, water quality monitoring, and habitat mapping. ● Food Quality Control: For detecting contaminants or assessing ripeness in agriculture. ● Medical Imaging: Hyperspectral cameras can be used to detect diseases, such as cancer, by analyzing tissue characteristics at the molecular level. ● Military and Defense: Used in surveillance, detecting hidden objects, and identifying specific materials or threats. Key Differences Between Multispectral and Hyperspectral Imaging: ● Spectral Resolution: Hyperspectral imaging provides much higher spectral resolution due to its narrower, continuous bands, while multispectral imaging uses fewer, broader bands. ● Data Volume: Hyperspectral images produce much larger datasets because they contain more spectral bands per pixel. This requires more advanced processing techniques and storage. ● Applications: Hyperspectral imaging is more suited for detailed material identification, while multispectral imaging is often used for broad analysis and monitoring over larger areas.
Advantages of Multispectral and Hyperspectral Imaging: ● Enhanced Detection: Both imaging types allow for the detection of features that are not visible in traditional photography (e.g., plant stress or water quality). ● Non-destructive: These techniques allow for analysis without physically interacting with the object or area being studied. ● Detailed Analysis: Hyperspectral imaging, in particular, allows for the identification of specific materials and substances at a molecular level.
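
The data-volume difference is simple arithmetic: an uncompressed image cube needs rows × columns × bands × bytes per sample. The sketch below uses illustrative figures (a 1000 × 1000 pixel scene, 2 bytes per sample, 8 bands versus 200 bands); these numbers are assumptions for the example, not values for any particular sensor.

```python
def cube_size_bytes(rows, cols, bands, bytes_per_sample=2):
    """Uncompressed size of an image cube with one sample per pixel per band."""
    return rows * cols * bands * bytes_per_sample

multispectral = cube_size_bytes(1000, 1000, bands=8)     # 16 million bytes
hyperspectral = cube_size_bytes(1000, 1000, bands=200)   # 400 million bytes

ratio = hyperspectral / multispectral   # 25 times more data for the same scene
```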

- 20. 5.7 Image Registration and Fusion 1. Image Registration Image registration is the process of aligning multiple images into a common coordinate system, allowing them to be compared, analyzed, or fused. The images may be of the same scene or object but captured at different times, from different angles, using different sensors, or under different conditions. The goal is to align the images so that corresponding points in the images match. Key Aspects: ● Types of Images: These could be from different sources such as multispectral, hyperspectral, or even different time frames or viewpoints. ● Transformation Models: Registration typically involves mathematical transformations to align the images, such as: ○ Rigid Transformation: Translations and rotations (no changes in scale or shape). ○ Affine Transformation: Includes scaling, rotation, translation, and shearing. ○ Non-rigid (Elastic) Transformation: More complex deformations, useful when images have nonlinear distortions. ● Reference Image: One image is usually designated as the reference, and the others are transformed to match it.
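
The difference between the rigid and affine models can be shown on a pair of 2D points. This is a minimal sketch with hypothetical helper functions `rigid` and `affine`; it checks that a rigid transformation keeps the distance between two points unchanged, while an affine transformation with scaling does not.

```python
import math

def rigid(point, angle_deg, tx, ty):
    """Rotate by angle_deg about the origin, then translate by (tx, ty)."""
    x, y = point
    t = math.radians(angle_deg)
    return (x * math.cos(t) - y * math.sin(t) + tx,
            x * math.sin(t) + y * math.cos(t) + ty)

def affine(point, a, b, c, d, tx, ty):
    """General affine map: [[a, b], [c, d]] applied to the point, plus (tx, ty)."""
    x, y = point
    return (a * x + b * y + tx, c * x + d * y + ty)

def distance(p, q):
    return math.hypot(p[0] - q[0], p[1] - q[1])

p, q = (0.0, 0.0), (3.0, 4.0)            # two points 5 units apart

rp, rq = rigid(p, 30, 2, 3), rigid(q, 30, 2, 3)
rigid_distance = distance(rp, rq)        # still 5: shape and scale preserved

# Affine example: scale x by 2 and add a shear term; distances change.
ap, aq = affine(p, 2, 0.5, 0, 1, 2, 3), affine(q, 2, 0.5, 0, 1, 2, 3)
affine_distance = distance(ap, aq)
```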

- 21. Steps in Image Registration: 1. Feature Detection: Identify distinctive points, edges, or regions in the images that can be matched. 2. Feature Matching: Establish correspondences between points in the images. 3. Transformation: Apply a geometric transformation (translation, rotation, scaling, etc.) to align the images. 4. Resampling: Interpolate pixel values in the transformed image to match the reference. 5. Evaluation: Measure the accuracy of the registration to ensure alignment quality (using metrics like mean square error, correlation, etc.). Applications: ● Medical Imaging: Combining MRI, CT scans, and X-rays for a better understanding of a patient's anatomy. ● Remote Sensing: Aligning images captured by different satellites or sensors (e.g., multispectral and panchromatic) for environmental monitoring. ● Computer Vision: Object recognition and tracking by aligning images taken from different viewpoints or times. ● Robotics: Aligning data from different sensors (such as cameras and LIDAR) for mapping and navigation.
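
A very small registration example is sketched below. It deliberately replaces feature detection and matching (steps 1 and 2) with an exhaustive search over integer translations, and uses mean square error for the evaluation step, so it illustrates only the transform, resample and evaluate part of the pipeline. The function names and the toy images are assumptions for this example.

```python
def shift(img, dy, dx, fill=0):
    """Translate an image by whole pixels; uncovered pixels get `fill`."""
    rows, cols = len(img), len(img[0])
    out = [[fill] * cols for _ in range(rows)]
    for r in range(rows):
        for c in range(cols):
            sr, sc = r - dy, c - dx
            if 0 <= sr < rows and 0 <= sc < cols:
                out[r][c] = img[sr][sc]
    return out

def mse(a, b):
    """Mean square error between two images of equal size."""
    n = len(a) * len(a[0])
    return sum((x - y) ** 2 for ra, rb in zip(a, b) for x, y in zip(ra, rb)) / n

def register_translation(reference, moving, max_shift=2):
    """Try every integer shift up to max_shift; return (error, dy, dx) of the best."""
    best = None
    for dy in range(-max_shift, max_shift + 1):
        for dx in range(-max_shift, max_shift + 1):
            err = mse(reference, shift(moving, dy, dx))
            if best is None or err < best[0]:
                best = (err, dy, dx)
    return best

# Reference: a bright 2x2 blob in a 6x6 image.
reference = [[0] * 6 for _ in range(6)]
for r in (2, 3):
    for c in (2, 3):
        reference[r][c] = 100

moving = shift(reference, 1, -1)          # same scene, displaced down and left
err, dy, dx = register_translation(reference, moving)
aligned = shift(moving, dy, dx)           # moving image resampled onto the reference
```

The search recovers the opposite of the displacement that was applied, and the aligned image matches the reference exactly in this noise-free case.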

- 22. 2. Image Fusion Image fusion is the process of combining multiple images (or datasets) into a single image, with the goal of providing more detailed, accurate, and useful information than any of the individual images alone. Fusion typically uses the information from different image sources, sensors, or modalities, and integrates them to form a comprehensive view of the scene or object. Key Aspects: ● Data Sources: Images might come from different sensors (e.g., multispectral, hyperspectral, thermal, radar, visible light) or from the same sensor but under different conditions. ● Resolution: Image fusion often involves combining high-resolution spatial information with low-resolution spectral information or vice versa. ● Fusion Goals: The aim is to combine complementary information, such as enhancing image details (spatial or spectral), improving contrast, or achieving more robust information for further analysis. Types of Image Fusion: 1. Pixel-Level Fusion: Combining pixel values from multiple images to form a new pixel value. This can enhance resolution, contrast, or detail. ○ Example: Combining a high-resolution panchromatic image with a low-resolution multispectral image. ○ Techniques: Principal Component Analysis (PCA), Wavelet Transform, or Intensity-Hue-Saturation (IHS) transform. 2. Feature-Level Fusion: Involves extracting features (e.g., edges, shapes) from different images and then combining them into a unified set of features. ○ Example: Combining edges detected in one image with texture information from another. 3. Decision-Level Fusion: Multiple processed images or data sets are fused based on decision-making algorithms or models (e.g., classifiers or decision trees). ○ Example: Combining the results of image classification from different sensors to make a final decision about land cover type.
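
Two of the fusion levels can be sketched in a few lines. The example below assumes the input images are already registered and stored as equally sized lists of rows. It uses a plain weighted average for pixel-level fusion (a simpler stand-in for the PCA, wavelet and IHS techniques named above) and a majority vote for decision-level fusion. The function names, pixel values and class labels are illustrative.

```python
from collections import Counter

def fuse_pixels(img_a, img_b, weight_a=0.5):
    """Pixel-level fusion: weighted average of corresponding pixels."""
    return [[weight_a * a + (1 - weight_a) * b for a, b in zip(ra, rb)]
            for ra, rb in zip(img_a, img_b)]

def fuse_decisions(label_maps):
    """Decision-level fusion: majority vote over per-pixel class labels."""
    rows, cols = len(label_maps[0]), len(label_maps[0][0])
    return [[Counter(m[r][c] for m in label_maps).most_common(1)[0][0]
             for c in range(cols)]
            for r in range(rows)]

visible = [[100, 200], [50, 0]]
thermal = [[0, 100], [150, 200]]
fused = fuse_pixels(visible, thermal)     # equal weights for both sensors

# Land-cover labels produced by three hypothetical classifiers.
votes = fuse_decisions([
    [["water", "forest"]],
    [["water", "urban"]],
    [["forest", "forest"]],
])
```

With an odd number of classifiers and two-way disagreements, as here, the vote has a clear winner; a real system would also need a rule for ties.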

- 23. 5.8 Emerging Trends in Image Processing Technologies 1. AI and Deep Learning in Image Processing Artificial Intelligence (AI) and Deep Learning (DL) have become the backbone of modern image processing, offering unprecedented capabilities in automated image analysis, feature extraction, and pattern recognition. ● Convolutional Neural Networks (CNNs): CNNs, a class of deep learning architectures, have revolutionized image recognition tasks, making them more accurate and efficient. CNNs are being used for everything from object detection and classification to facial recognition and medical imaging. ● Transfer Learning: This involves taking a pre-trained model (trained on large datasets) and fine-tuning it for a specific image processing task. It reduces the need for large labeled datasets and accelerates the adoption of deep learning models in specialized domains. ● Generative Adversarial Networks (GANs): GANs are gaining prominence for tasks like image generation, style transfer, and super-resolution. GANs are particularly useful in producing realistic synthetic images and enhancing low-resolution images (super-resolution). ● Self-supervised Learning: This emerging trend focuses on training models without the need for large labeled datasets by using the inherent structure of the data itself to generate labels. It’s being applied to tasks such as image segmentation and classification.
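
The core operation inside a CNN layer is a small kernel slid over the image, followed by a non-linearity. The sketch below shows that single step in plain Python with a hand-picked kernel; in a trained network the kernel weights are learned from data, and the function names here are illustrative.

```python
def conv2d_valid(image, kernel):
    """Slide the kernel over the image (no padding) and sum the products."""
    kh, kw = len(kernel), len(kernel[0])
    out_h, out_w = len(image) - kh + 1, len(image[0]) - kw + 1
    return [[sum(image[r + i][c + j] * kernel[i][j]
                 for i in range(kh) for j in range(kw))
             for c in range(out_w)]
            for r in range(out_h)]

def relu(feature_map):
    """Keep positive responses, set negative ones to zero."""
    return [[max(0, v) for v in row] for row in feature_map]

# A bright vertical stripe on a dark background.
image = [[0, 1, 1, 0] for _ in range(4)]
# Hand-picked kernel that responds to dark-to-bright transitions (left to right).
kernel = [[-1, 1],
          [-1, 1]]

response = conv2d_valid(image, kernel)   # positive on the left edge, negative on the right
activated = relu(response)               # only the dark-to-bright edge remains
```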

- 24. 2. Edge Computing and Real-time Processing With the increasing demand for real-time image processing, especially in areas like autonomous systems, surveillance, and robotics, edge computing is becoming a key trend. Instead of sending data to a remote server for processing, edge computing allows for real-time image analysis directly on devices (such as cameras or drones) near the data source. ● Edge AI: By deploying AI models on edge devices (like mobile phones, smart cameras, and drones), image data can be processed in real-time without the latency or bandwidth constraints of cloud-based processing. ● Optimized Hardware: Specialized hardware, such as Graphics Processing Units (GPUs), Field Programmable Gate Arrays (FPGAs), and Tensor Processing Units (TPUs), is being used to accelerate image processing tasks at the edge. 3. 3D Imaging and Computer Vision The demand for more realistic and immersive experiences is driving the growth of 3D imaging and computer vision techniques. 3D imaging can capture depth and spatial information, providing a richer set of data for processing. ● LiDAR (Light Detection and Ranging): LiDAR is increasingly used for 3D imaging, especially in autonomous vehicles, environmental mapping, and robotics. LiDAR sensors provide detailed depth maps and help with obstacle detection and mapping. ● Stereo Vision and Depth Estimation: Techniques like stereo vision (using two cameras to capture images from different angles) and depth estimation (using AI to infer depth from 2D images) are allowing machines to understand 3D structures from regular 2D cameras. ● 3D Reconstruction: This involves creating 3D models from 2D images, which has applications in fields such as gaming, virtual reality (VR), augmented reality (AR), and medical imaging.
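
For a rectified stereo pair, depth follows from the standard pinhole relation depth = focal length × baseline / disparity, where disparity is the horizontal shift of a point between the two images. The sketch below applies that relation; the function name and the camera values (800 px focal length, 0.125 m baseline) are illustrative assumptions.

```python
def depth_from_disparity(focal_px, baseline_m, disparity_px):
    """Depth in metres of a point seen with the given disparity in pixels."""
    if disparity_px <= 0:
        raise ValueError("disparity must be positive for a finite depth")
    return focal_px * baseline_m / disparity_px

near = depth_from_disparity(800, 0.125, 50)   # large disparity: close object
far = depth_from_disparity(800, 0.125, 10)    # small disparity: distant object
```

Depth is inversely proportional to disparity, so distant objects produce small disparities and their depth estimates are more sensitive to matching errors.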

- 25. 4. Image Super-Resolution Image super-resolution is an emerging trend that uses AI and deep learning to increase the resolution of images beyond their original pixel density, improving detail and clarity. ● Deep Learning-based Super-Resolution: Modern techniques leverage deep neural networks (such as convolutional autoencoders) to generate high-resolution images from low-resolution inputs. ● Single-image Super-Resolution (SISR): Using deep learning, SISR can generate high-resolution images from a single low-resolution image, improving detail while minimizing artifacts. ● Video Super-Resolution: Techniques that apply image super-resolution algorithms to video data to improve the quality of low-resolution video streams, often used in streaming services and security cameras. 5. Neuro-Inspired and Bio-Inspired Imaging Systems Inspired by the human brain and sensory systems, neuro-inspired and bio-inspired techniques are gaining attention in image processing. These systems mimic biological processes to achieve more efficient and accurate image recognition. ● Neuromorphic Computing: This involves building computing systems that model the brain's neural circuits. In image processing, neuromorphic systems can handle visual data in ways that are more energy-efficient and adaptive than traditional computing. ● Spiking Neural Networks (SNNs): These networks attempt to model the way neurons in the brain process visual data, offering more biologically plausible approaches for image processing and recognition.
