Version space - Concept Learning - Machine learning

- 1. Version space - Concept Learning

- 2. Concept Learning • We incrementally learn general concepts from specific training examples. • Each concept can be viewed as describing some subset of objects or events defined over a larger set. • Concept: a boolean-valued function defined over a larger set. – E.g.: a function defined over all engineering courses whose value is true for computer science courses and false for all other courses.

- 3. Concept Learning • Concept learning is the task of automatically inferring the general definition of some concept, given examples labelled as members or non-members of the concept. – That is, inferring a boolean-valued function from training examples of its input and output.

- 4. Concept Learning - Task • Example: the task of learning the target concept "Days on which my friend Suzen enjoys his favorite water sport". Training examples for the target concept "EnjoySport" are labelled Yes or No. • The attribute EnjoySport indicates whether or not Suzen enjoys his favorite water sport on a given day. • The task is to learn to predict the value of EnjoySport for an arbitrary day, based on the values of its other attributes.
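The slide's table of training examples is not reproduced in this text. As a concrete reference point, here is one way to encode it in Python, assuming the four-example EnjoySport data set from Tom Mitchell's *Machine Learning* (1997), Table 2.1, which agrees with the FIND-S trace on the later slides. The names `ATTRIBUTES` and `D` are illustrative choices, not part of the original slides.

```python
# Attribute order shared by every instance (and hypothesis) tuple.
ATTRIBUTES = ("Sky", "AirTemp", "Humidity", "Wind", "Water", "Forecast")

# Assumed EnjoySport training set (Mitchell, Machine Learning, 1997, Table 2.1).
# Each entry is an ordered pair (x, c(x)): c(x) = 1 for EnjoySport = Yes, 0 for No.
D = [
    (("Sunny", "Warm", "Normal", "Strong", "Warm", "Same"), 1),
    (("Sunny", "Warm", "High", "Strong", "Warm", "Same"), 1),
    (("Rainy", "Cold", "High", "Strong", "Warm", "Change"), 0),
    (("Sunny", "Warm", "High", "Strong", "Cool", "Change"), 1),
]

for x, cx in D:
    row = ", ".join(f"{a}={v}" for a, v in zip(ATTRIBUTES, x))
    print(f"{row} -> EnjoySport={'Yes' if cx == 1 else 'No'}")
```

Encoding labels as 0/1 mirrors the notation c(x) ∈ {0, 1} used on the following slides.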

- 5. Hypothesis Representation • Hypothesis h: a conjunction of constraints on the instance attributes. • Let each hypothesis be a vector of six constraints, specifying the values of the six attributes (Sky, AirTemp, Humidity, Wind, Water, and Forecast). • Each constraint can be – a specific value (e.g., Water = Warm) – don't care (e.g., Water = ?) – no value allowed (e.g., Water = ɸ) • Example: over the attributes <Sky, AirTemp, Humidity, Wind, Water, Forecast>, the hypothesis <Sunny, ?, High, ?, ?, ?>

- 6. Hypothesis Representation • If some instance x satisfies all the constraints of hypothesis h, then h classifies x as a positive example (h(x) = 1); otherwise h(x) = 0. • Example: the hypothesis that Suzen enjoys his favourite sport only on days with warm air temperature and strong wind (independent of the values of the other attributes) is represented by the expression <?, Warm, ?, Strong, ?, ?>

- 7. Hypothesis Representation • The most general hypothesis, that every day is a positive example, is represented by <?, ?, ?, ?, ?, ?> • The most specific possible hypothesis, that no day is a positive example, is represented by <ɸ, ɸ, ɸ, ɸ, ɸ, ɸ>
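A minimal Python sketch of how such a hypothesis classifies an instance, assuming hypotheses and instances are 6-tuples of strings in the attribute order given above, with `"?"` for "don't care" and `"ɸ"` for "no value allowed". The attribute value names (Sunny/Cloudy/Rainy, Warm/Cold, and so on) are assumed from Mitchell's EnjoySport example, and the function name `h_of_x` is hypothetical.

```python
from itertools import product

ANY, EMPTY = "?", "ɸ"  # "don't care" and "no value allowed"

# Assumed attribute domains (value names follow Mitchell's EnjoySport example).
DOMAINS = [
    ("Sunny", "Cloudy", "Rainy"),  # Sky
    ("Warm", "Cold"),              # AirTemp
    ("Normal", "High"),            # Humidity
    ("Strong", "Weak"),            # Wind
    ("Warm", "Cool"),              # Water
    ("Same", "Change"),            # Forecast
]

def h_of_x(h, x):
    """h(x): 1 if x satisfies every constraint in h, else 0.
    '?' accepts any value; 'ɸ' never equals a real value, so it accepts none."""
    return int(all(c == ANY or c == v for c, v in zip(h, x)))

most_general = (ANY,) * 6
most_specific = (EMPTY,) * 6
X = list(product(*DOMAINS))  # every possible day

print(h_of_x(("?", "Warm", "?", "Strong", "?", "?"),
             ("Sunny", "Warm", "High", "Strong", "Cool", "Change")))  # 1
print(sum(h_of_x(most_general, x) for x in X))   # 96: every day is positive
print(sum(h_of_x(most_specific, x) for x in X))  # 0: no day is positive
```

Representing "ɸ" as a string that can never equal a real attribute value keeps the satisfaction test to a single comparison per constraint.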

- 8. Hypothesis Representation • In general, any concept learning task can be described by – the set of instances over which the target function is defined – the target function – the set of candidate hypotheses considered by the learner, and – the set of available training examples

- 10. Concept Learning Task - EnjoySport Prototype

- 11. Concept Learning Task - EnjoySport Prototype • The set of items over which the concept is defined is called the set of instances, which we denote by X. • Example: X is the set of all possible days, each represented by the attributes Sky, AirTemp, Humidity, Wind, Water, and Forecast. • The concept or function to be learned is called the target concept, which we denote by c. – In general, c can be any boolean-valued function defined over the instances X; that is, c : X → {0, 1}. • Example: the target concept corresponds to the value of the attribute EnjoySport (i.e., c(x) = 1 if EnjoySport = Yes, and c(x) = 0 if EnjoySport = No).

- 12. Concept Learning Task - EnjoySport Prototype • When learning the target concept, the learner is presented with a set of training examples, each consisting of an instance x from X along with its target concept value c(x). • Instances for which c(x) = 1 are called positive examples, or members of the target concept. • Instances for which c(x) = 0 are called negative examples, or non-members of the target concept. • The ordered pair <x, c(x)> describes the training example consisting of the instance x and its target concept value c(x). • D denotes the set of available training examples.

- 13. Concept Learning Task - EnjoySport Prototype • Given a set of training examples of the target concept c, the problem faced by the learner is to hypothesize, or estimate, c. • H denotes the set of all possible hypotheses that the learner may consider regarding the identity of the target concept. • In general, each hypothesis h in H represents a boolean-valued function defined over X; that is, h : X → {0, 1}. • The goal of the learner is to find a hypothesis h such that h(x) = c(x) for all x in X.
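Because c is known only on the training examples, the learner can in practice only check whether h(x) = c(x) holds on D, that is, whether h is consistent with D. A minimal sketch, assuming the same tuple encoding (`"?"` for don't care, `"ɸ"` for no value allowed) and Mitchell's four EnjoySport examples as D; `consistent` is a hypothetical helper name.

```python
ANY = "?"  # "don't care" constraint; "ɸ" never matches a real value

def h_of_x(h, x):
    """1 if instance x satisfies every constraint of hypothesis h, else 0."""
    return int(all(c == ANY or c == v for c, v in zip(h, x)))

def consistent(h, examples):
    """True if h(x) == c(x) for every training pair (x, c(x))."""
    return all(h_of_x(h, x) == cx for x, cx in examples)

# Assumed training set D (Mitchell 1997, Table 2.1), pairs (x, c(x)).
D = [
    (("Sunny", "Warm", "Normal", "Strong", "Warm", "Same"), 1),
    (("Sunny", "Warm", "High", "Strong", "Warm", "Same"), 1),
    (("Rainy", "Cold", "High", "Strong", "Warm", "Change"), 0),
    (("Sunny", "Warm", "High", "Strong", "Cool", "Change"), 1),
]

print(consistent(("Sunny", "Warm", "?", "Strong", "?", "?"), D))  # True
print(consistent(("?",) * 6, D))  # False: labels the negative example positive
```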

- 14. The Inductive Learning Hypothesis • Learning task: to determine a hypothesis h identical to the target concept c over the entire set of instances X. – The only information available about c is its value over the training examples. • Therefore, inductive learning algorithms can at best guarantee that the output hypothesis fits the target concept over the training data. • Assumption: the best hypothesis regarding unseen instances is the hypothesis that best fits the observed training data. • The inductive learning hypothesis: any hypothesis found to approximate the target function well over a sufficiently large set of training examples will also approximate the target function well over other unobserved examples.

- 15. CONCEPT LEARNING AS SEARCH

- 16. CONCEPT LEARNING AS SEARCH • Concept learning can be viewed as the task of searching through a large space of hypotheses implicitly defined by the hypothesis representation. • Goal of the search: find the hypothesis that best fits the training examples. – By selecting a hypothesis representation, the designer of the learning algorithm implicitly defines the space of all hypotheses that the program can ever represent and therefore can ever learn. • Example: consider the instances X and hypotheses H in the EnjoySport learning task. – Given that the attribute Sky has three possible values, and that AirTemp, Humidity, Wind, Water, and Forecast each have two possible values, the instance space X contains exactly 3 × 2 × 2 × 2 × 2 × 2 = 96 distinct instances. – Each attribute constraint in a hypothesis can also be "?" or "ɸ", so there are 5 × 4 × 4 × 4 × 4 × 4 = 5120 syntactically distinct hypotheses within H.
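Both counts can be checked mechanically. This sketch assumes only the domain sizes stated on the slide (3 values for Sky, 2 for each other attribute) and encodes each value as an integer index; `math.prod` requires Python 3.8 or later.

```python
from itertools import product
from math import prod

# Domain sizes from the slide: Sky has 3 values, the other five attributes have 2.
n_values = [3, 2, 2, 2, 2, 2]

# |X|: pick one value per attribute.
n_instances = prod(n_values)
# Syntactic |H|: each constraint is one of the attribute's values, "?", or "ɸ".
n_syntactic = prod(k + 2 for k in n_values)

# Cross-check both products by brute-force enumeration of index tuples.
assert n_instances == sum(1 for _ in product(*(range(k) for k in n_values)))
assert n_syntactic == sum(1 for _ in product(*(range(k + 2) for k in n_values)))

print(n_instances, n_syntactic)  # 96 5120
```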

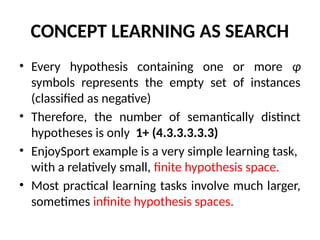

- 17. CONCEPT LEARNING AS SEARCH • Every hypothesis containing one or more ɸ symbols represents the empty set of instances; that is, it classifies every instance as negative. • Therefore, the number of semantically distinct hypotheses is only 1 + (4 × 3 × 3 × 3 × 3 × 3) = 973: one hypothesis for the empty set, plus every hypothesis in which each attribute is either one of its values or "?". • The EnjoySport example is a very simple learning task, with a relatively small, finite hypothesis space. • Most practical learning tasks involve much larger, sometimes infinite, hypothesis spaces.
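The figure of 973 can also be confirmed by brute force: enumerate all 5120 syntactic hypotheses, compute the set of instances each one classifies as positive, and count the distinct sets. A sketch, assuming the value names from Mitchell's EnjoySport example and the `"?"`/`"ɸ"` string encoding; `h_of_x` is a hypothetical helper name.

```python
from itertools import product

ANY, EMPTY = "?", "ɸ"

# Assumed attribute domains (value names follow Mitchell's EnjoySport example).
DOMAINS = [
    ("Sunny", "Cloudy", "Rainy"),  # Sky
    ("Warm", "Cold"),              # AirTemp
    ("Normal", "High"),            # Humidity
    ("Strong", "Weak"),            # Wind
    ("Warm", "Cool"),              # Water
    ("Same", "Change"),            # Forecast
]

def h_of_x(h, x):
    """1 if x satisfies every constraint of h; 'ɸ' matches no value."""
    return int(all(c == ANY or c == v for c, v in zip(h, x)))

X = list(product(*DOMAINS))                              # all instances
H = list(product(*(d + (ANY, EMPTY) for d in DOMAINS)))  # all syntactic hypotheses

# Two hypotheses are semantically equal if they accept exactly the same instances.
extensions = {frozenset(x for x in X if h_of_x(h, x)) for h in H}

print(len(X), len(H), len(extensions))  # 96 5120 973
```

All hypotheses containing "ɸ" collapse onto the single empty `frozenset()`, which is why the count drops from 5120 to 973.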

- 18. CONCEPT LEARNING AS SEARCH

- 19. FIND-S: FINDING A MAXIMALLY SPECIFIC HYPOTHESIS • Use the more_general_than partial ordering to organize the search for a hypothesis consistent with the observed training examples. – Begin with the most specific possible hypothesis in H, then generalize this hypothesis each time it fails to cover an observed positive training example. – A hypothesis "covers" a positive example if it correctly classifies the example as positive. • FIND-S algorithm:
  1. Initialize h to the most specific hypothesis in H.
  2. For each positive training instance x:
     - For each attribute constraint aᵢ in h:
       - If the constraint aᵢ is satisfied by x, then do nothing.
       - Else replace aᵢ in h by the next more general constraint that is satisfied by x.
  3. Output hypothesis h.
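A minimal Python sketch of the three steps, assuming nominal attributes encoded as strings, `"ɸ"` and `"?"` as the empty and don't-care constraints, and training pairs (x, c(x)) with c(x) ∈ {0, 1}. For such attributes, the "next more general constraint" is taken to be: ɸ becomes the observed value, and a specific value that x violates becomes ?. The function name `find_s` and the data set (Mitchell's four EnjoySport examples) are assumptions of this sketch.

```python
ANY, EMPTY = "?", "ɸ"

def find_s(examples, n_attributes=6):
    """FIND-S for conjunctive hypotheses over nominal attributes."""
    # Step 1: start from the most specific hypothesis <ɸ, ..., ɸ>.
    h = [EMPTY] * n_attributes
    # Step 2: generalize only on positive examples; negatives are ignored.
    for x, cx in examples:
        if cx != 1:
            continue
        for i, value in enumerate(x):
            if h[i] == EMPTY:
                # 'ɸ' -> the observed value (next more general constraint)
                h[i] = value
            elif h[i] != ANY and h[i] != value:
                # a specific value that x violates -> '?'
                h[i] = ANY
            # otherwise the constraint is already satisfied: do nothing
    # Step 3: output h.
    return tuple(h)

# Assumed training set D (Mitchell 1997, Table 2.1), pairs (x, c(x)).
D = [
    (("Sunny", "Warm", "Normal", "Strong", "Warm", "Same"), 1),
    (("Sunny", "Warm", "High", "Strong", "Warm", "Same"), 1),
    (("Rainy", "Cold", "High", "Strong", "Warm", "Change"), 0),
    (("Sunny", "Warm", "High", "Strong", "Cool", "Change"), 1),
]

print(find_s(D))  # ('Sunny', 'Warm', '?', 'Strong', '?', '?')
```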

- 20. FIND-S: Example • Illustration: the EnjoySport task. – The first step of FIND-S is to initialize h to the most specific hypothesis in H: h ← <ɸ, ɸ, ɸ, ɸ, ɸ, ɸ> – Upon observing the first training example in EnjoySport, which is a positive example, it is clear that the hypothesis is too specific. – None of the "ɸ" constraints in h are satisfied by this example, so each is replaced by the next more general constraint that fits the example, namely the attribute values of this training example.

- 21. FIND-S: Example • Hence, h ← <Sunny, Warm, Normal, Strong, Warm, Same>. • This h is still very specific; it asserts that all instances are negative except for the single positive training example observed. • Next, the second training example (also positive) forces the algorithm to further generalize h, this time substituting a "?" in place of any attribute value in h that is not satisfied by the new example. • The refined hypothesis in this case is h ← <Sunny, Warm, ?, Strong, Warm, Same>. • Upon encountering the third training example (a negative example), the algorithm makes no change to h. • The FIND-S algorithm simply ignores every negative example, so h is not revised.

- 22. FIND-S: Example • The fourth (positive) example leads to a further generalization of h: h ← <Sunny, Warm, ?, Strong, ?, ?> • The search moves from hypothesis to hypothesis, from the most specific to progressively more general hypotheses.
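The step-by-step trace above can be reproduced with a short sketch that records h after each training example. It assumes the same string encoding and Mitchell's four EnjoySport examples as D; `generalize` and `find_s_trace` are hypothetical names.

```python
ANY, EMPTY = "?", "ɸ"

def generalize(h, x):
    """Minimally generalize h so that it covers the positive instance x."""
    return tuple(
        v if c == EMPTY else (c if c in (ANY, v) else ANY)
        for c, v in zip(h, x)
    )

def find_s_trace(examples):
    """Return [h0, h1, ..., hn]: the hypothesis before and after each example."""
    h = (EMPTY,) * 6
    trace = [h]
    for x, cx in examples:
        if cx == 1:
            h = generalize(h, x)
        trace.append(h)  # a negative example leaves h unchanged
    return trace

# Assumed training set D (Mitchell 1997, Table 2.1), pairs (x, c(x)).
D = [
    (("Sunny", "Warm", "Normal", "Strong", "Warm", "Same"), 1),
    (("Sunny", "Warm", "High", "Strong", "Warm", "Same"), 1),
    (("Rainy", "Cold", "High", "Strong", "Warm", "Change"), 0),
    (("Sunny", "Warm", "High", "Strong", "Cool", "Change"), 1),
]

for step, h in enumerate(find_s_trace(D)):
    print(step, h)
```

Steps 1, 2, and 4 print the three hypotheses listed on the slides, and step 3 repeats step 2 because the negative example is skipped.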

- 23. FIND-S: Example Search in terms of the instance and hypothesis spaces: • At each step, the hypothesis is generalized only as far as necessary to cover the new positive example. • At each stage the hypothesis is the most specific hypothesis consistent with the training examples observed up to this point (hence the name FIND-S).
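The more_general_than ordering can be made concrete for conjunctive hypotheses: hⱼ is more general than or equal to hₖ when every instance that satisfies hₖ also satisfies hⱼ. The sketch below uses a syntactic test that is valid for this representation provided every attribute has at least two values (an assumption worth rechecking for other hypothesis languages), and confirms that each hypothesis in the FIND-S trace from the slides is strictly more general than the one before it. `more_general_or_equal` is a hypothetical name.

```python
ANY, EMPTY = "?", "ɸ"

def more_general_or_equal(hj, hk):
    """hj >=_g hk for conjunctive hypotheses over attributes with >= 2 values each."""
    if EMPTY in hk:
        # hk covers no instance at all, so every hypothesis is at least as general.
        return True
    # Otherwise each constraint of hj must be "?" or identical to hk's constraint.
    return all(a == ANY or a == b for a, b in zip(hj, hk))

# Successive distinct hypotheses from the FIND-S trace on the slides.
trace = [
    (EMPTY,) * 6,
    ("Sunny", "Warm", "Normal", "Strong", "Warm", "Same"),
    ("Sunny", "Warm", "?", "Strong", "Warm", "Same"),
    ("Sunny", "Warm", "?", "Strong", "?", "?"),
]

for earlier, later in zip(trace, trace[1:]):
    # Each step generalizes: later >=_g earlier holds, but not the reverse.
    print(more_general_or_equal(later, earlier), more_general_or_equal(earlier, later))
```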

- 24. FIND-S: Example • The key property of the FIND-S algorithm is that for hypothesis spaces described by conjunctions of attribute constraints (such as H for the EnjoySport task), – FIND-S is guaranteed to output the maximally specific hypothesis within H that is consistent with the positive training examples. • Its final hypothesis will also be consistent with the negative examples provided the correct target concept is contained in H, and provided the training examples are correct.
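The condition that the target concept be contained in H matters. The sketch below uses a hypothetical three-example data set whose target, "Sky is Sunny or Cloudy", is a disjunction and therefore not representable as a conjunction of attribute constraints: FIND-S generalizes Sky to "?" and ends up misclassifying the negative example. The data and the helper names `find_s` and `h_of_x` are illustrative assumptions.

```python
ANY, EMPTY = "?", "ɸ"

def find_s(examples, n=6):
    """FIND-S: minimally generalize from <ɸ,...,ɸ> over positive examples only."""
    h = (EMPTY,) * n
    for x, cx in examples:
        if cx == 1:
            h = tuple(v if c == EMPTY else (c if c in (ANY, v) else ANY)
                      for c, v in zip(h, x))
    return h

def h_of_x(h, x):
    """1 if x satisfies every constraint of h, else 0."""
    return int(all(c == ANY or c == v for c, v in zip(h, x)))

# Hypothetical data: target is "Sky = Sunny OR Sky = Cloudy"; other attributes fixed.
D = [
    (("Sunny",  "Warm", "Normal", "Strong", "Cool", "Change"), 1),
    (("Cloudy", "Warm", "Normal", "Strong", "Cool", "Change"), 1),
    (("Rainy",  "Warm", "Normal", "Strong", "Cool", "Change"), 0),
]

h = find_s(D)
print(h)                                    # ('?', 'Warm', 'Normal', 'Strong', 'Cool', 'Change')
print([h_of_x(h, x) == cx for x, cx in D])  # [True, True, False]
```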

- 25. Find-S Example