Vital AI: Big Data Modeling

- 1. Big Data Modeling Today: Marc C. Hadfield, Founder Vital AI http://vital.ai marc@vital.ai 917.463.4776

- 2. intro Marc C. Hadfield, Founder Vital AI http://vital.ai marc@vital.ai

- 3. Big Data Modeling is Data Modeling with the “Variety” Big Data Dimension in mind…

- 4. Big Data “Variety” Dimension: The “Variety” problem can be addressed by a combination of improved tools and a methodology involving both system architecture and data science / analysis. Compared to Volume and Velocity, Variety is a very labor-intensive, human-centric process. Variety is the many types of data to be utilized together in a data-driven application: potentially too many types for any single person to keep track of (especially in Life Sciences).

- 5. Key Takeaways: Using OWL as a “meta-schema” can drastically reduce operations/development effort and increase the value of the data for analysis. OWL can augment and not replace familiar development processes and tools. A huge amount of ongoing development effort is spent transforming data across components and keeping data consistent during analysis. Collecting Good Data = Good Analytics

- 6. Big Data Modeling: Challenges; Goals; OWL as Modeling Language; Using OWL-based Models…; Collaboration/Modeling Tools

- 7. Examples from NYC Department of Education: Domain Ontology; Application Architecture; Development Methodology/Tools

- 8. NYC Department of Education:

- 14. Data Models in: Data Architecture; Data Science

- 15. Challenges

- 16. Mobile/Web App Architecture (diagram labels): Mobile App; Server Implementation; Database; Data Model (×2)

- 17. Enterprise Data Warehouse Architecture (diagram labels): Database (×4); ETL Process; Master Database "Data Lake"; Business Intelligence; Data Analytics; Dashboard; Data Model (×5); Schema “on read” or “on write”

- 18. Lambda Architecture + Hadoop: Data Driven App (diagram labels): Mobile App; Server Layer; Real Time Data; Calculated Views; Hadoop; Predictive Analytics; Master Database "Data Lake"; Business Intelligence; Data Analytics; Dashboard; Data Model (×5)

- 19. Data Wrangling / Data Science (diagram labels): Master Database "Data Lake"; Business Intelligence; Data Analytics; Raw Data; R; Data Model (×3). Prediction Models must integrate back with production environment.

- 20. Same Data, Different Contexts… Redundant Models.

- 21. Data Architecture Issues: Redundant Data Definitions (Database Schema, JSON Data, Data Object Classes, Avro/Parquet): Considerable Development / Maintenance / Operational Overhead

- 22. Data Science / Data Wrangling Issues: Data Harmonization: Merging Datasets from Multiple Sources. Loss of Context: Feature f123 = Column135 X Column45 / Column13. Side note: Let’s stop using CSV files for datasets! No more flat datasets!

- 23. Goals

- 24. Goals: Reduce redundancy in Data Definitions; Enforce Clean/Harmonized Data; Use Contextual Datasets; Use Best Software Components (Databases, Analytics, …); Use Familiar Tools (IDE, git, Languages, R)

- 25. OWL as Modeling Language

- 26. Web Ontology Language (OWL): Specifies an Ontology (“Data Model”). Formal Semantics, W3C Standard. Provides a language to describe the meaning of data properties and how they relate to classes. Example: Mammal. Necessary Conditions: warm-blooded, vertebrate animal, has hair or fur, secretes milk, (typically) live birth. Greater descriptive power than Schema (SQL Tables) and Serialization Frameworks (Avro).

- 27. Why OWL? If we can more formally specify what the data *means*, then we can have a single data model (ontology) apply to our entire architecture, and data can be transformed automatically locally as per the needs of a specific software module. Manually coded data transforms may be “lossy” and/or introduce errors, so eliminating them helps keep data clean.

- 28. Why OWL? (continued) Example: if we specify what a “Document” is, then a text-mining analyzer will know how to access the textual data without further prompting. Example: if we specify Features for use in Machine Learning in the ontology, then features can be generated automatically to train Machine Learning Models, and the same features would be generated when we use the model in production.
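
The second example can be sketched as follows. This is a minimal illustration, assuming features are declared once as named definitions next to the data model; the `featureDefinitions` table, the property names (`enrollment`, `teacherCount`), and the `computeFeatures` helper are all hypothetical and are not part of VitalSigns or any Vital AI API.

```javascript
// Hypothetical feature definitions, declared once alongside the data model.
// Each feature keeps a name and the properties it is derived from, so its
// meaning is not lost the way it is with an anonymous column formula.
const featureDefinitions = [
  {
    name: "studentsPerTeacher",
    inputs: ["enrollment", "teacherCount"],
    compute: (school) => school.enrollment / school.teacherCount,
  },
  {
    name: "isLargeSchool",
    inputs: ["enrollment"],
    compute: (school) => (school.enrollment >= 1000 ? 1 : 0),
  },
];

// The same function builds feature values for training and for production,
// so both code paths use identical definitions.
function computeFeatures(record, definitions = featureDefinitions) {
  const features = {};
  for (const def of definitions) {
    const missing = def.inputs.filter((key) => record[key] === undefined);
    if (missing.length > 0) {
      throw new Error(`Feature ${def.name} is missing inputs: ${missing.join(", ")}`);
    }
    features[def.name] = def.compute(record);
  }
  return features;
}

// Illustrative records only; the values are made up for the sketch.
const trainingRow = computeFeatures({ enrollment: 1200, teacherCount: 60 });
const productionRow = computeFeatures({ enrollment: 450, teacherCount: 30 });
console.log(trainingRow, productionRow);
```

Because the definitions carry their input property names, a record that lacks a required property fails loudly instead of silently producing a different feature in production.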

- 29. Why OWL? (continued) Note: As ontologies can extend other ontologies, rather than a single ontology, a collection of linked ontologies can be used, allowing segmentation across an organization.

- 30. Vital Core Ontology: Protege Editor… Nodes, Edges, HyperNodes, HyperEdges get URIs. John/WorksFor/IBM -> Node / Edge / Node

- 31. Extending the Ontology: Vital Core Ontology; Vital Domain Ontology; Application Domain Ontology

- 32. NYC Dept of Education Domain Ontology

- 33. Generating Data Bindings with VitalSigns (Code/Schema Generation): Ontology -> VitalSigns -> Groovy Bindings, Semantic Bindings, Hadoop Bindings, Prolog Bindings, Graph Bindings, HBase Bindings, JavaScript Bindings. Command: `vitalsigns generate -ont name…`

- 34. Data Representations:
  - Groovy: `person123.name = "John"`; `person123.worksFor.company456`
  - RDF: `<person123> <hasName> "John"`; `<worksFor123> <hasSource> <person123>`; `<worksFor123> <hasDestination> <company456>`; `<worksFor123> <hasType> <worksFor>`
  - HBase: `person123, Node:type=Person, Node:hasName="John"`; `worksFor123, Edge:type=worksFor, Edge:hasSource=person123, Edge:hasDestination=company456`
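
As a rough sketch of how one in-memory node and edge could be written out in the RDF-style and HBase-style forms shown on this slide: the object shapes and the helper names (`nodeToTriples`, `edgeToTriples`, `nodeToRow`, `edgeToRow`) are assumptions made for illustration, not the generated VitalSigns bindings.

```javascript
// One node and one edge, using the identifiers from the slide.
const node = { uri: "person123", type: "Person", properties: { hasName: "John" } };
const edge = { uri: "worksFor123", type: "worksFor", source: "person123", destination: "company456" };

// URIs are wrapped in angle brackets; literal values are double-quoted.
const ref = (uri) => `<${uri}>`;
const literal = (value) => JSON.stringify(String(value));

// RDF-style statements: each node property becomes one triple.
function nodeToTriples(n) {
  return Object.entries(n.properties).map(
    ([prop, value]) => `${ref(n.uri)} ${ref(prop)} ${literal(value)}`
  );
}

// An edge is its own subject, with source, destination, and type statements.
function edgeToTriples(e) {
  return [
    `${ref(e.uri)} <hasSource> ${ref(e.source)}`,
    `${ref(e.uri)} <hasDestination> ${ref(e.destination)}`,
    `${ref(e.uri)} <hasType> ${ref(e.type)}`,
  ];
}

// HBase-style row: the row key followed by "family:qualifier=value" cells.
function nodeToRow(n) {
  const cells = [`Node:type=${n.type}`];
  for (const [prop, value] of Object.entries(n.properties)) {
    cells.push(`Node:${prop}=${literal(value)}`);
  }
  return [n.uri, ...cells].join(", ");
}

function edgeToRow(e) {
  return [
    e.uri,
    `Edge:type=${e.type}`,
    `Edge:hasSource=${e.source}`,
    `Edge:hasDestination=${e.destination}`,
  ].join(", ");
}

console.log(nodeToTriples(node).concat(edgeToTriples(edge)).join("\n"));
console.log(nodeToRow(node));
console.log(edgeToRow(edge));
```

The point of the sketch is that every representation is derived from the same object, so no hand-written transform sits between them.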

- 35. VitalSigns Generation -> JAR Library (diagram labels): Runtime; Domain Ontology (×4); VitalSigns Class

- 37. Developing with the Ontology in UI, Hadoop, NLP, Scripts, ... (Eclipse IDE): Node:Person, Edge:hasFriend, Node:Person; `Set<Friend> person123.getFriends()`

- 38. JVM Development

  ```groovy
  // Reference to an NYCSchool object
  NYCSchool school123 = … // get from database

  // Get a list of programs, local context (cache)
  List<NYCSchoolProgram> localPrograms = school123.getPrograms()

  // Get list of programs, global context (database)
  List<NYCSchoolProgram> servicePrograms = school123.getPrograms(Context.ServiceWide)
  ```

- 39. Using JSON-Schema Data in JavaScript

  ```javascript
  for (var i = 0; i < progressReports.length; i++) {
    var r = progressReports[i];
    var sub = $('<ul>');
    sub.append('<li>Overall Grade : ' + r.progReportOverallGrade + '</li>');
    sub.append('<li>Progress Grade: ' + r.progReportProgressGrade + '</li>');
    sub.append('<li>Environment Grade: ' + r.progReportEnvironmentGrade + '</li>');
    sub.append('<li>College and Career Readiness Grade: ' + r.progRepCollegeAndCareerReadinessGrade + '</li>');
    sub.append('<li>Performance Grade: ' + r.progReportPerformanceGrade + '</li>');
    sub.append('<li>Closing the Achievement Gap Points: ' + r.progReportClosingTheAchievementGapPoints + '</li>');
    sub.append('<li>Percentile Rank: ' + r.progReportPercentileRank + '</li>');
    sub.append('<li>Overall Score: ' + r.progReportOverallScore + '</li>');
  }
  ```

- 40. NoSQL Queries: Query API / CRUD Operations. Queries generated into “native” NoSQL Query format: SPARQL / Triplestore (AllegroGraph); HBase / DynamoDB; MongoDB; Hive/HiveQL (on Spark/Hadoop 2.0). Query Types: “Select” and “Graph”. Abstract type of datastore from application/analytics code. Pass in a “native” query when necessary.
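
A minimal sketch of the idea of generating “native” queries from one datastore-neutral “Select” query. The query shape, the `<hasType>` predicate for node types, and the functions `toSparql`, `toMongoFind`, and `generateQuery` are hypothetical; the actual Vital AI query API is not shown in the deck.

```javascript
// A hypothetical datastore-neutral "Select" query: a type plus property constraints.
const selectQuery = {
  type: "Person",
  constraints: { hasName: "John" },
  limit: 10,
};

// Generate a SPARQL string for a triplestore. Names are emitted as bare
// <...> references, mirroring the RDF form on the data-representation slide.
function toSparql(query) {
  const patterns = [`?s <hasType> <${query.type}> .`];
  for (const [prop, value] of Object.entries(query.constraints)) {
    patterns.push(`?s <${prop}> ${JSON.stringify(String(value))} .`);
  }
  return `SELECT ?s WHERE { ${patterns.join(" ")} } LIMIT ${query.limit}`;
}

// Generate a filter document and limit of the kind a MongoDB find() call takes.
function toMongoFind(query) {
  return { filter: { type: query.type, ...query.constraints }, limit: query.limit };
}

// Application code names only the target; a string is treated as an
// already-native query and passed through unchanged.
function generateQuery(query, target) {
  if (typeof query === "string") return query;
  switch (target) {
    case "sparql":
      return toSparql(query);
    case "mongodb":
      return toMongoFind(query);
    default:
      throw new Error(`Unsupported datastore: ${target}`);
  }
}

console.log(generateQuery(selectQuery, "sparql"));
console.log(JSON.stringify(generateQuery(selectQuery, "mongodb")));
```

Keeping the pass-through in the same entry point means analytics code can stay datastore-agnostic by default and still drop down to a native query when the abstraction is not expressive enough.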

- 41. Data Serialization, Analytics Jobs: Data Serialized into file format by blocks of objects. Leverage Hadoop Serialization Standards: Sequence File, Avro, Parquet. Get data in and out of HDFS Files. Spark/Hadoop jobs passed a set of objects as input; URI of object is key. Data Objects are serialized into Compressed Strings for transport over Flume, etc.

- 42. Machine Learning: Via Hadoop, Spark, R; Mahout, MLlib. Build Predictive Models: Classification, Clustering... Use Features defined in Ontology. Learn Target defined in Ontology. Models consume Ontology Data as input.
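
A small sketch of “objects serialized into compressed strings, keyed by URI”, assuming JSON as the object encoding, gzip plus base64 as the compressed string form, and a tab-separated line per object. These format choices and the helper names are assumptions for illustration; the deck does not specify the actual wire format.

```javascript
const zlib = require("zlib");

// Serialize one data object to a compressed, text-safe string.
function encodeObject(obj) {
  const json = JSON.stringify(obj);
  return zlib.gzipSync(Buffer.from(json, "utf8")).toString("base64");
}

// Reverse of encodeObject: base64 -> gunzip -> JSON.
function decodeObject(encoded) {
  const json = zlib.gunzipSync(Buffer.from(encoded, "base64")).toString("utf8");
  return JSON.parse(json);
}

// Serialize a block of objects as "uri<TAB>payload" lines, so that the URI
// is the key a downstream job sees. Assumes URIs contain no tab or newline.
function encodeBlock(objects) {
  return objects.map((obj) => `${obj.uri}\t${encodeObject(obj)}`).join("\n");
}

// Read a block back into a Map keyed by URI.
function decodeBlock(text) {
  const byUri = new Map();
  for (const line of text.split("\n")) {
    if (line === "") continue; // tolerate an empty block or trailing newline
    const [uri, payload] = line.split("\t");
    byUri.set(uri, decodeObject(payload));
  }
  return byUri;
}

const block = encodeBlock([
  { uri: "person123", type: "Person", hasName: "John" },
  { uri: "company456", type: "Company" },
]);
const restored = decodeBlock(block);
console.log(restored.get("person123"));
```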

- 43. Natural Language Processing/Text Mining: Topic Categorization… Extract Entities… Text Features from Ontology Classes extending Document…

- 44. Graph Analytics GraphX, Giraph: PageRank, Centrality, Interest Graph, …

- 45. Inference / Rules: Use Semantic Web Rule Engines / Reasoners. Load Ontology + RDF Representation of Data Instances (Individuals).

- 46. R Analytics: Load Serialized Data into R Dataframes. Reference Classes and Properties by Name in Dataframes (cleaner code than a huge number of columns).

- 47. Graph Visualization with Cytoscape: Data already in Node/Edge Graph Form

- 48. Graph Visualization with Cytoscape

- 49. Visualize Data “Hot Spots”

- 50. NYC Schools Architecture Mobile App JSON Schema VertX Vital Flow Queue Rule Engine NLP DynamoDB Vital Prime VitalService Client NYC Schools Data Model R Serialized Data Data Insights

- 52. Collaboration/Tools: git (code revision system); OWL Ontologies treated as code artifact. Coordinate across Teams: “Front End”, “Back End”, “Operations”, “Business Intelligence”, “Data Science”… Coordinate across Enterprise: Departments / Business Units. “Data Model of Record”

- 53. Ontology Versioning: NYCSchoolRecommendation-0.1.8.owl; Semantic Versioning (http://semver.org/)

- 54. vitalsigns command line: `vitalsigns generate` (code/schema generation); `vitalsigns upversion/downversion` (increase version patch number, move previous version to archive, rename OWL file including username); JAR files pushed into Maven (Continuous Integration)
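
The patch-number bump can be sketched on the versioned file name from the previous slide. The functions below are hypothetical helpers written for illustration, not the `vitalsigns` implementation; the username-based rename is left out because the deck does not show its format.

```javascript
// Parse "<name>-<major>.<minor>.<patch>.owl" into its parts.
function parseOntologyFileName(fileName) {
  const match = /^(.+)-(\d+)\.(\d+)\.(\d+)\.owl$/.exec(fileName);
  if (!match) {
    throw new Error(`Not a versioned ontology file name: ${fileName}`);
  }
  const [, name, major, minor, patch] = match;
  return { name, major: Number(major), minor: Number(minor), patch: Number(patch) };
}

function formatOntologyFileName({ name, major, minor, patch }) {
  return `${name}-${major}.${minor}.${patch}.owl`;
}

// "Upversion": increase the patch number and report which file would be
// moved to the archive. No files are touched in this sketch.
function upversion(fileName) {
  const current = parseOntologyFileName(fileName);
  return {
    archived: fileName,
    next: formatOntologyFileName({ ...current, patch: current.patch + 1 }),
  };
}

console.log(upversion("NYCSchoolRecommendation-0.1.8.owl"));
```

Under semantic versioning a patch bump signals a backward-compatible change, which is why it is the default step here; minor and major bumps would follow the same pattern.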

- 55. Git Integration: git: add, commit, push, pull. diff: determine differences. merge: merge two Ontologies; detect types of Ontology changes; merge into new patch version.
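
One way to picture “detect types of Ontology changes” and “merge two Ontologies” is a diff and three-way merge over simplified ontology snapshots. This is only a sketch: each snapshot is assumed to be a plain map from property name to declared type, the three-way strategy against a common base is an assumption, and real OWL diffing involves classes, restrictions, and annotations that are not modeled here.

```javascript
const has = (obj, key) => Object.prototype.hasOwnProperty.call(obj, key);

// Classify changes between two snapshots: added, removed, or retyped properties.
function diffOntologies(base, changed) {
  const added = [];
  const removed = [];
  const retyped = [];
  for (const name of Object.keys(changed)) {
    if (!has(base, name)) added.push(name);
    else if (base[name] !== changed[name]) retyped.push(name);
  }
  for (const name of Object.keys(base)) {
    if (!has(changed, name)) removed.push(name);
  }
  return { added, removed, retyped };
}

// Three-way merge: take whichever side changed a property relative to the
// base; if both sides changed it differently, report a conflict instead.
function mergeOntologies(base, ours, theirs) {
  const merged = {};
  const conflicts = [];
  const names = new Set([...Object.keys(base), ...Object.keys(ours), ...Object.keys(theirs)]);
  for (const name of names) {
    const b = has(base, name) ? base[name] : undefined;
    const o = has(ours, name) ? ours[name] : undefined;
    const t = has(theirs, name) ? theirs[name] : undefined;
    let value;
    if (o === t) value = o; // both sides agree (including both deleted)
    else if (o === b) value = t; // only theirs changed
    else if (t === b) value = o; // only ours changed
    else {
      conflicts.push(name);
      continue;
    }
    if (value !== undefined) merged[name] = value;
  }
  return { merged, conflicts };
}

// Illustrative snapshots with made-up property names.
const base = { name: "string", grade: "string" };
const ours = { name: "string", grade: "string", borough: "string" };
const theirs = { name: "string", grade: "integer" };
console.log(diffOntologies(base, ours));
console.log(mergeOntologies(base, ours, theirs));
```

A merge result with no conflicts is the case that could be written out as a new patch version; conflicting properties would need a person to decide.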

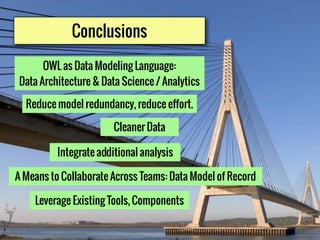

- 56. Conclusions: OWL as Data Modeling Language for Data Architecture & Data Science / Analytics. Leverage Existing Tools, Components. Reduce model redundancy, reduce effort. A Means to Collaborate Across Teams: Data Model of Record. Cleaner Data. Integrate additional analysis.

- 57. For more information, please contact: Marc C. Hadfield http://vital.ai marc@vital.ai 917.463.4776 Thank You!
