Data preprocessing for Machine Learning with R and Python

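- 2. Dataset

  | Country | Age | Salary | Purchased |
  | --- | --- | --- | --- |
  | France | 44 | 72000 | No |
  | Spain | 27 | 48000 | Yes |
  | Germany | 30 | 54000 | No |
  | Spain | 38 | 61000 | No |
  | Germany | 40 | | Yes |
  | France | 35 | 58000 | Yes |
  | Spain | | 52000 | No |
  | France | 48 | 79000 | Yes |
  | Germany | 50 | 83000 | No |
  | France | 37 | 67000 | Yes |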

- 3. Python

- 4. File Reading from directory in Python
  - `from tkinter import Tk`
  - `from tkinter.filedialog import askopenfilename`
  - `root = Tk()`
  - `root.withdraw()`
  - `root.update()`
  - `file_path = askopenfilename()`
  - `root.destroy()`

- 5. Importing the libraries
  - `import numpy as np`
  - `import matplotlib.pyplot as plt`
  - `import pandas as pd`

- 6. Importing the dataset
  - `dataset = pd.read_csv('Data.csv')`
  - `X = dataset.iloc[:, :-1].values`
  - `y = dataset.iloc[:, 3].values`
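The slicing on this slide can be tried without a file on disk. The sketch below is only an illustration: it assumes `Data.csv` holds exactly the ten rows from the Dataset slide and reads them from an in-memory string (`CSV_TEXT` is a name introduced here, not part of the slides).

```python
import io

import pandas as pd

# Assumed contents of Data.csv: the ten rows from the Dataset slide.
# Empty fields are the two missing values (one Salary, one Age).
CSV_TEXT = """Country,Age,Salary,Purchased
France,44,72000,No
Spain,27,48000,Yes
Germany,30,54000,No
Spain,38,61000,No
Germany,40,,Yes
France,35,58000,Yes
Spain,,52000,No
France,48,79000,Yes
Germany,50,83000,No
France,37,67000,Yes
"""

dataset = pd.read_csv(io.StringIO(CSV_TEXT))

# Features: every column except the last one.
X = dataset.iloc[:, :-1].values
# Target: the column at index 3 (Purchased).
y = dataset.iloc[:, 3].values

print(X.shape, y.shape)
```

`X` ends up as an object array because it mixes strings and numbers, which is why the later slides index into it by column position.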

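- 7. Missing data
  - `from sklearn.impute import SimpleImputer`
  - `imputer = SimpleImputer(missing_values = np.nan, strategy = 'mean')`
  - `imputer = imputer.fit(X[:, 1:3])`
  - `X[:, 1:3] = imputer.transform(X[:, 1:3])`
  - Note: scikit-learn 0.22 and later provide `SimpleImputer` in place of the older `sklearn.preprocessing.Imputer`; it always imputes column by column, so no `axis` argument is needed.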
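To see what mean imputation does to the two gaps in the dataset, here is a small sketch on the Age and Salary columns alone. It uses `SimpleImputer`, the replacement for the `Imputer` class in current scikit-learn releases; the array name `ages_salaries` is introduced here for illustration.

```python
import numpy as np
from sklearn.impute import SimpleImputer

# Age and Salary columns from the Dataset slide; np.nan marks the gaps.
ages_salaries = np.array([
    [44, 72000], [27, 48000], [30, 54000], [38, 61000], [40, np.nan],
    [35, 58000], [np.nan, 52000], [48, 79000], [50, 83000], [37, 67000],
], dtype=float)

# Replace each missing value with the mean of the observed values
# in the same column.
imputer = SimpleImputer(missing_values=np.nan, strategy="mean")
filled = imputer.fit_transform(ages_salaries)

print(filled[6, 0])  # imputed Age: mean of the nine known ages
print(filled[4, 1])  # imputed Salary: mean of the nine known salaries
```

Each gap is filled with its own column's mean, so the imputed Age and the imputed Salary are computed independently of each other.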

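- 8. Encoding categorical data
  - `from sklearn.compose import ColumnTransformer`
  - `from sklearn.preprocessing import LabelEncoder, OneHotEncoder`
  - `ct = ColumnTransformer([('country', OneHotEncoder(), [0])], remainder = 'passthrough', sparse_threshold = 0)`
  - `X = ct.fit_transform(X)`
  - Note: current scikit-learn releases no longer accept `OneHotEncoder(categorical_features = [0])`. `OneHotEncoder` now takes string columns directly, so the `LabelEncoder` pass over `X[:, 0]` is not needed, and `ColumnTransformer` selects which column to encode.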
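One-hot encoding gives each country its own 0/1 column, so the model does not read an order into arbitrary integer codes. A minimal sketch on a four-row Country column (the array `countries` is made up for illustration):

```python
import numpy as np
from sklearn.preprocessing import OneHotEncoder

# A single categorical column, shaped (n_samples, 1) as scikit-learn expects.
countries = np.array([["France"], ["Spain"], ["Germany"], ["Spain"]])

encoder = OneHotEncoder()
# fit_transform returns a sparse matrix by default; toarray() makes it dense.
one_hot = encoder.fit_transform(countries).toarray()

# Categories are sorted alphabetically, which fixes the column order.
print(encoder.categories_[0])
print(one_hot)
```

Each row contains exactly one 1, in the column that corresponds to its country.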

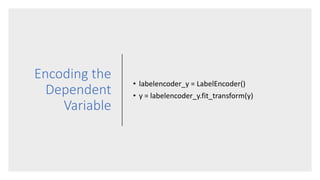

- 9. Encoding the Dependent Variable
  - `labelencoder_y = LabelEncoder()`
  - `y = labelencoder_y.fit_transform(y)`
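For a two-class target such as Purchased, label encoding is enough because the classifier only needs distinct class identifiers. A small sketch, using a made-up three-element target:

```python
import numpy as np
from sklearn.preprocessing import LabelEncoder

# Illustrative target values, in the same form as the Purchased column.
y = np.array(["No", "Yes", "No"])

labelencoder_y = LabelEncoder()
# Classes are sorted, so "No" maps to 0 and "Yes" maps to 1.
y_encoded = labelencoder_y.fit_transform(y)

print(labelencoder_y.classes_, y_encoded)
```

`labelencoder_y.inverse_transform` maps predictions back to the original `No`/`Yes` labels.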

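- 10. Splitting into Training set and Test set
  - `from sklearn.model_selection import train_test_split`
  - `X_train, X_test, y_train, y_test = train_test_split(X, y, test_size = 0.2, random_state = 42)`
  - Note: `train_test_split` lives in `sklearn.model_selection`; the older `sklearn.cross_validation` module is not available in current scikit-learn releases.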
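With ten rows and `test_size = 0.2`, the split leaves eight rows for training and two for testing. A sketch on placeholder arrays (the values of `X` and `y` are invented; only their length matches the dataset):

```python
import numpy as np
from sklearn.model_selection import train_test_split

# Placeholder data with ten samples, standing in for the preprocessed X and y.
X = np.arange(20).reshape(10, 2)
y = np.array([0, 1, 0, 0, 1, 1, 0, 1, 0, 1])

# random_state fixes the shuffle so the split is reproducible.
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.2, random_state=42
)

print(X_train.shape, X_test.shape)
```

Re-running with the same `random_state` yields the same rows in each set, which keeps later results comparable.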

- 11. Feature Scaling
  - `from sklearn.preprocessing import StandardScaler`
  - `sc_X = StandardScaler()`
  - `X_train = sc_X.fit_transform(X_train)`
  - `X_test = sc_X.transform(X_test)`
  - NOTE: Apply feature scaling after splitting the data, for the following reasons:
    - Split, then scale. You do not know what real-world data will look like, so you cannot scale the training data to it. The test data is the surrogate for real-world data, so treat it the same way.
    - To reiterate: split, fit the scaler on the training data, then apply that same scaling to the test data.
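The "fit on training, apply to test" rule can be checked by hand: the scaled test rows should equal the test rows standardized with the training mean and standard deviation. A sketch with invented Age/Salary rows:

```python
import numpy as np
from sklearn.preprocessing import StandardScaler

# Illustrative numeric features (Age, Salary); values are made up.
X_train = np.array([[27.0, 48000.0], [30.0, 54000.0],
                    [38.0, 61000.0], [44.0, 72000.0]])
X_test = np.array([[50.0, 83000.0]])

sc_X = StandardScaler()
X_train_scaled = sc_X.fit_transform(X_train)  # learns mean and std from train
X_test_scaled = sc_X.transform(X_test)        # reuses the training statistics

# The same transformation written out by hand with training statistics.
manual = (X_test - X_train.mean(axis=0)) / X_train.std(axis=0)

print(np.allclose(X_test_scaled, manual))
```

Calling `fit_transform` on the test set instead would compute fresh statistics from the test rows, which is the leak the note warns against.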

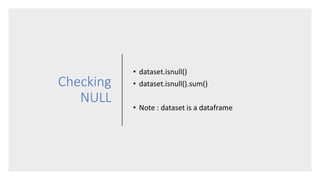

- 12. Checking NULL
  - `dataset.isnull()`
  - `dataset.isnull().sum()`
  - Note: `dataset` is a pandas DataFrame.
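`isnull()` returns a boolean mask of the same shape as the DataFrame, and `.sum()` on that mask counts the missing entries per column. A sketch on a three-row frame built for illustration:

```python
import numpy as np
import pandas as pd

# A small DataFrame with one missing Age and one missing Salary.
dataset = pd.DataFrame({
    "Country": ["France", "Germany", "Spain"],
    "Age": [44.0, 40.0, np.nan],
    "Salary": [72000.0, np.nan, 52000.0],
})

mask = dataset.isnull()                 # True where a value is missing
missing_per_column = mask.sum()         # True counts as 1, so this is a count

print(missing_per_column)
```

Running this check before imputation shows which columns need the missing-data step at all.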

- 13. Data Preprocessing Python
  - Importing the libraries
    - `import numpy as np`
    - `import matplotlib.pyplot as plt`
    - `import pandas as pd`
  - Importing the dataset
    - `dataset = pd.read_csv('Data.csv')`
    - `X = dataset.iloc[:, :-1].values`
    - `y = dataset.iloc[:, 3].values`
  - Taking care of missing data
    - `from sklearn.impute import SimpleImputer`
    - `imputer = SimpleImputer(missing_values = np.nan, strategy = 'mean')`
    - `imputer = imputer.fit(X[:, 1:3])`
    - `X[:, 1:3] = imputer.transform(X[:, 1:3])`
  - Splitting the dataset into the Training set and Test set
    - `from sklearn.model_selection import train_test_split`
    - `X_train, X_test, y_train, y_test = train_test_split(X, y, test_size = 0.2, random_state = 0)`
  - Feature Scaling (numeric columns only, because `Country` is still a string in this template)
    - `from sklearn.preprocessing import StandardScaler`
    - `sc_X = StandardScaler()`
    - `X_train[:, 1:3] = sc_X.fit_transform(X_train[:, 1:3])`
    - `X_test[:, 1:3] = sc_X.transform(X_test[:, 1:3])`
    - `Purchased` is a categorical label, so `y` is not scaled here. For a continuous (regression) target, reshape it to 2-D first: `sc_y = StandardScaler()`, then `y_train = sc_y.fit_transform(y_train.reshape(-1, 1))`.

- 14. R

- 15. R: Importing the dataset
  - `dataset = read.csv('Data.csv')`

- 16. R: Missing data
  - `dataset$Age = ifelse(is.na(dataset$Age), ave(dataset$Age, FUN = function(x) mean(x, na.rm = TRUE)), dataset$Age)`
  - `dataset$Salary = ifelse(is.na(dataset$Salary), ave(dataset$Salary, FUN = function(x) mean(x, na.rm = TRUE)), dataset$Salary)`

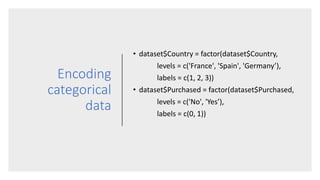

- 17. R: Encoding categorical data
  - `dataset$Country = factor(dataset$Country, levels = c('France', 'Spain', 'Germany'), labels = c(1, 2, 3))`
  - `dataset$Purchased = factor(dataset$Purchased, levels = c('No', 'Yes'), labels = c(0, 1))`

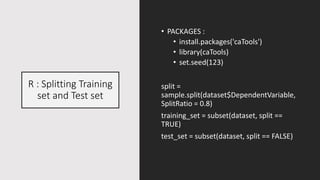

- 18. R: Splitting Training set and Test set
  - Packages:
    - `install.packages('caTools')`
    - `library(caTools)`
  - `set.seed(123)`
  - `split = sample.split(dataset$Purchased, SplitRatio = 0.8)`
  - `training_set = subset(dataset, split == TRUE)`
  - `test_set = subset(dataset, split == FALSE)`
  - Note: the first argument to `sample.split` is the dependent variable, which is `Purchased` in this dataset.

- 19. R: Feature Scaling
  - `training_set = scale(training_set)`
  - `test_set = scale(test_set)`
  - NOTE: Unlike in Python, these two calls fail in R, because `scale()` needs numeric input and the `Country` and `Purchased` factors are not numeric. Apply feature scaling only to the non-categorical columns, so the code becomes:
  - `training_set[, 2:3] = scale(training_set[, 2:3])`
  - `test_set[, 2:3] = scale(test_set[, 2:3])`

- 20. Data Preprocessing R
  - Importing the dataset
    - `dataset = read.csv('Data.csv')`
  - Taking care of missing data
    - `dataset$Age = ifelse(is.na(dataset$Age), ave(dataset$Age, FUN = function(x) mean(x, na.rm = TRUE)), dataset$Age)`
    - `dataset$Salary = ifelse(is.na(dataset$Salary), ave(dataset$Salary, FUN = function(x) mean(x, na.rm = TRUE)), dataset$Salary)`
  - Splitting the dataset into the Training set and Test set
    - `# install.packages('caTools')`
    - `library(caTools)`
    - `set.seed(123)`
    - `split = sample.split(dataset$Purchased, SplitRatio = 0.8)`
    - `training_set = subset(dataset, split == TRUE)`
    - `test_set = subset(dataset, split == FALSE)`
  - Feature Scaling (numeric columns `Age` and `Salary` only)
    - `training_set[, 2:3] = scale(training_set[, 2:3])`
    - `test_set[, 2:3] = scale(test_set[, 2:3])`
