Kernel Bayes Rule

- 1. 1 Kernel Bayes’ Rule Yan Xu yxu15@uh.edu Kernel based automatic learning workshop University of Houston April 24, 2014 K. Fukumizu, L. Song, A. Gretton, “Kernel Bayes’ rule: Bayesian inference with positive definite kernels” Journal of Machine Learning Research, vol. 14, Dec. 2013.

- 2. Bayesian inference Bayes’ rule • PROS – Principled and flexible method for statistical inference. – Can incorporate prior knowledge. • CONS – Computation: integral is needed » Numerical integration: Monte Carlo etc » Approximation: Variational Bayes, belief propagation etc. 2 𝑞 𝑥 𝑦 = 𝑝 𝑦 𝑥 𝜋(𝑥) 𝑝 𝑦 𝑥 𝜋 𝑥 𝑑𝑥posterior likelihood prior

- 3. Motivating Example: Robot location COLD: Cosy Location Database Kanagawa et al. Kernel Monte Carlo Filter, 2013 State 𝑋𝑡 ∈ 𝐑3 : 2-D coordinate and orientation of a robot Observation 𝑍𝑡: image SIFT features (Scale Invariant Feature Transform, 4200dim) Goal: Estimate the location of a robot from image sequences

- 4. – Hidden Markov Model Sequential application of Bayes’ rule solves the task. – Nonparametric approach is needed: Observation process: 𝑝 𝑍𝑡 𝑋𝑡) is very difficult to model with a simple parametric model. “Nonparametric” implementation of Bayesian inference 4 X1 X2 X3 XT Z1 Z2 Z3 ZT … Transition of state Location & orientation image location & orientation image of the environment 4 location & orientation image of the environment 𝑝 𝑍𝑡 𝑋𝑡) 𝑝 𝑋𝑡 𝑍1:𝑡)

- 5. Kernel method for Bayesian inference A new nonparametric / kernel approach to Bayesian inference • Using positive definite kernels to represent probabilities. – Kernel mean embedding is used. • “Nonparametric” Bayesian inference – No density functions are needed, but data are needed. • Bayesian inference with matrix computation. – Computation is done with Gram matrices. – No integral, no approximate inference. 5

- 6. Kernel methods: an overview 6 Feature space (functional space) xi F H W xj Space of original data feature map Do linear analysis in the feature space. Φ: Ω → 𝐻, 𝑥 ↦ Φ(𝑥) Kernel PCA, kernel SVM, kernel regression etc. Φ 𝑥𝑖 Φ 𝑥𝑗

- 7. Positive semi-definite kernel 7 Def. W: set; k : W x W R k is positive semi-definite if k is symmetric, and for any the matrix (Gram matrix) satisfies – Examples on Rm: • Gaussian kernel • Laplace kernel • Polynomial kernel 𝑐 = [𝑐1, … , 𝑐 𝑛] 𝑇∈ 𝑅 𝑛, 𝑛 ∈ 𝐍, 𝑥1, … , 𝑥 𝑛 ∈ W, 𝐺 𝑋: 𝑘 𝑋𝑖, 𝑋𝑗 𝑖𝑗 𝑐 𝑇 𝐺 𝑋 𝑐 = 𝑐𝑖 𝑐𝑗 𝑘 𝑋𝑖, 𝑋𝑗 𝑛 𝑖,𝑗=1 ≥ 0. 𝑘 𝐺 𝑥, 𝑦 = exp − 1 2𝜎2 ||𝑥 − 𝑦||2 𝑘 𝐿 𝑥, 𝑦 = exp −𝛼 |𝑥𝑖 − 𝑦𝑖| 𝑚 𝑖=1 𝑘 𝑃 𝑥, 𝑦 = 𝑥 𝑇 𝑦 + 𝑐 𝑑 (𝑐 ≥ 0, 𝑑 ∈ 𝐍) (𝛼 > 0) (𝜎 > 0) 𝑘 𝑋𝑖, 𝑋𝑗 =<Φ 𝑋𝑖 , Φ 𝑋𝑗 > positive definite: 𝑐 𝑇 𝐺 𝑋 𝑐 > 0.

- 8. Reproducing Kernel Hilbert Space 8 “Feature space” = Reproducing kernel Hilbert space (RKHS) A positive definite kernel 𝑘 on W uniquely defines a RKHS Hk (Aronzajn 1950). • Function space: functions on W. • Very special inner product: for any 𝑓 ∈ 𝐻 𝑘 • Its dimensionality may be infinite (Gaussian, Laplace). (reproducing property)𝑓, 𝑘 ∙ , 𝑥 𝐻 𝑘 = 𝑓(𝑥)

- 9. Mapping data into RKHS 9 Φ: Ω → 𝐻 𝑘, 𝑥 ↦ 𝑘(⋅, 𝑥) 𝑋1, … , 𝑋 𝑛 ↦ Φ 𝑋1 , … , Φ(𝑋 𝑛): functional data Basic statistics on Euclidean space Basic statistics on RKHS Probability Covariance Conditional probability Kernel mean Covariance operator Conditional kernel mean

- 10. Mean on RKHS 10 X: random variable taking value on a measurable space W, ~ P. k: pos.def. kernel on W. : RKHS defined by k. Def. kernel mean on H : – Kernel mean can express higher-order moments of 𝑋. Suppose 𝑘 𝑢, 𝑥 = 𝑐0 + 𝑐1 𝑢𝑥 + 𝑐2 𝑢𝑥 2 + ⋯ 𝑐𝑖 ≥ 0 , e.g., 𝑒 𝑢𝑥 – Reproducing expectations 𝑓, 𝑚 𝑃 = 𝐸 𝑓 𝑋 for any 𝑓 ∈ 𝐻 𝑘. 𝑚 𝑃 ≔ 𝐸 Φ 𝑋 = 𝐸 𝑘 ⋅ , 𝑋 = 𝑘 ⋅, 𝑥 𝑑𝑃 𝑥 ∈ 𝐻 𝑘 𝑚 𝑃 𝑢 = 𝑐0 + 𝑐1 𝐸 𝑋 𝑢 + 𝑐2 𝐸 𝑋2 𝑢2 + ⋯ 𝐻 𝑘

- 11. Characteristic kernel (Fukumizu et al. JMLR 2004, AoS 2009; Sriperumbudur et al. JMLR2010) 11 Def. A bounded pos. def. kernel k is called characteristic if is injective, i.e., 𝐸 𝑋~𝑃 𝑘 ⋅ , 𝑋 = 𝐸 𝑌~𝑄 𝑘 ⋅ , 𝑌 𝑃 = 𝑄. 𝑚 𝑃 with a characteristic kernel uniquely determines a probability. Examples: Gaussian, Laplace kernel Polynomial kernel: not characteristic. P → 𝐻 𝑘, 𝑃 ↦ 𝑚 𝑃

- 12. Covariance 12 (X , Y) : random vector taking values on WX×WY. (HX, kX), (HY , kY): RKHS on WX and WY, resp. Def. (uncentered) covariance operators 𝐶 𝑌𝑋: 𝐻 𝑋 → 𝐻 𝑌, 𝐶 𝑋𝑋: 𝐻 𝑋 → 𝐻 𝑋 Reproducing property 𝐶 𝑌𝑋: = 𝐸 Φ 𝑌 𝑌 Φ 𝑋 𝑋 ,⋅ 𝐻 𝑋 , 𝐶 𝑋𝑋 = 𝐸 Φ 𝑋 𝑋 Φ 𝑋 𝑋 ,⋅ 𝐻 𝑋 𝑔, 𝐶 𝑌𝑋 𝑓 𝐻 𝑌 = 𝐸 𝑓 𝑋 𝑔 𝑌 for all 𝑓 ∈ 𝐻 𝑋, 𝑔 ∈ 𝐻 𝑌. WX WY FX FY HX HY X Y FX(X) FY(Y) YXC 𝐶 𝑌𝑋 𝑓 = 𝑘 𝑌 ⋅, 𝑦 𝑓 𝑥 𝑑𝑃 𝑥, 𝑦 , 𝐶 𝑋𝑋 𝑓 = 𝑘 𝑋 ⋅, 𝑥 𝑓 𝑥 𝑑𝑃𝑋(𝑥) 𝐶 𝑌𝑋 𝑓 = 1 𝑛 𝑘 𝑌 ⋅, 𝑌𝑖 𝑘 𝑋 ⋅, 𝑋𝑖 , 𝑓 𝑛 𝑖=1 = 1 𝑛 𝑘 𝑌 ⋅, 𝑌𝑖 𝑓(𝑋𝑖) 𝑛 𝑖=1 Empirical Estimator: Given 𝑋1, 𝑌1, , … , 𝑋 𝑛, 𝑌𝑛 ~ 𝑃, i.i.d.,

- 13. Conditional kernel mean 13 – 𝑋, 𝑌: Centered gaussian random vectors (∈ 𝑅 𝑚, 𝑅ℓ, resp.) – With characteristic kernels, for general 𝑋 and 𝑌, argmin 𝐴∈𝑅ℓ×𝑚 𝑌 − 𝐴𝑋 2 𝑑𝑃(𝑋, 𝑌) = 𝑉𝑌𝑋 𝑉𝑋𝑋 −1 argmin 𝐹∈𝐻 𝑋⊗𝐻 𝑌 Φ 𝑌 𝑌 − 𝐹 𝑋 𝐻 𝑌 2 𝑑𝑃(𝑋, 𝑌) = 𝐶 𝑌𝑋 𝐶 𝑋𝑋 −1 〈𝐹, Φ 𝑋 𝑋 〉 𝐸 Φ 𝑌 𝑋 = 𝑥 = 𝐶 𝑌𝑋 𝐶 𝑋𝑋 −1 Φ 𝑋(𝑥) 𝑉 : Covariance matrix In practice: 𝑚 𝑌|𝑋=𝑥 ≔ 𝐶 𝑌𝑋 𝐶 𝑋𝑋 + 𝜀 𝑛 𝐼 −1 Φ 𝑋(𝑥) 𝐸 𝑌 𝑋 = 𝑥 = ?𝑉𝑌𝑋 𝑉𝑋𝑋 −1 𝑥

- 14. Kernel realization of Bayes’ rule 14 Bayes’ rule Π: prior with p. d. f 𝜋 𝑝(𝑦|𝑥): conditional probability (likelihood). Kernel realization: Goal: estimate the kernel mean of the posterior given – 𝑚Π: kernel mean of prior Π, – 𝐶 𝑋𝑋, 𝐶 𝑌𝑋: covariance operators for (𝑋, 𝑌) ~ 𝑄, 𝑞 𝑥 𝑦 = 𝑝 𝑦 𝑥 𝜋(𝑥) 𝑞(𝑦) , 𝑞 𝑦 = 𝑝 𝑦 𝑥 𝜋 𝑥 𝑑𝑥. 𝑚 𝑄 𝑥|𝑦∗ : = 𝑘 𝑋(⋅, 𝑥)𝑞 𝑥 𝑦∗ 𝑑𝑥

- 15. 15 𝑋𝑗, 𝑌𝑗 X Y Observation 𝑦∗ 𝑋𝑖, 𝑤𝑖 X Kernel realization of Bayes’ rule 𝑈𝑖, 𝛾𝑖 X Prior 𝑚Π = 𝛾𝑗Φ 𝑋 𝑈𝑗 ℓ 𝑗=1 𝑈1, 𝛾1 , … , 𝑈ℓ, 𝛾ℓ : weighted sample expression from importance sampling Posterior 𝑚 𝑄 𝑥|𝑦∗ = 𝑤𝑖 𝑦∗ Φ 𝑋(𝑋𝑖) 𝑛 𝑖=1 𝑋1, 𝑌1 , … , 𝑋 𝑛, 𝑌𝑛 : (joint) sample ~ 𝑄

- 16. 𝑚 𝑄 𝑥|𝑦∗ ⋅ = 𝑤𝑖 𝑦∗ 𝑘 𝑋 ⋅, 𝑋𝑖 = 𝐤 𝑋 ⋅ 𝑇 𝑅 𝑥|𝑦 𝐤 𝑌 𝑦∗ 𝑛 𝑖=1 Kernel Bayes’ Rule 16 Input: 𝑋1, 𝑌1 , … , 𝑋 𝑛, 𝑌𝑛 ~ Q, 𝑚Π = 𝛾𝑗k 𝑋 𝑋𝑖, 𝑈𝑗 ℓ 𝑗=1 𝑖=1 (prior) n < 𝑓 , 𝑚 𝑄 𝑥|𝑦∗ > = 𝐟 𝑋 𝑇 𝑅 𝑥|𝑦 𝐤 𝑌 𝑦∗ , 𝐟 𝑋 = 𝑓 𝑋1 , … , 𝑓 𝑋 𝑛 𝑇𝑓 ∈ 𝐻 𝑋 𝐤 𝑌 𝑦∗ = 𝐤 𝑌 𝑌𝑖, 𝑦∗ 𝑖=1 n 𝜀 𝑛, 𝛿 𝑛: regularization coefficients Note: y∗ : observation 𝐺 𝑋: 𝑘 𝑋 𝑋𝑖, 𝑋𝑗 𝑖𝑗 𝐺 𝑋𝑈: 𝑘 𝑋 𝑋𝑖, 𝑈𝑗 𝑖𝑗 𝐺 𝑌: 𝑘 𝑌 𝑌𝑖, 𝑌𝑗 𝑖𝑗 Λ = Diag 𝐺 𝑋/𝑛 + 𝜀 𝑛 𝐼 𝑛 −1 𝐺 𝑋𝑈 𝛾 n × n n× ℓ ℓ × 1n × n 𝑅 𝑥|𝑦 = Λ𝐺 𝑌 Λ𝐺 𝑌 2 + 𝛿 𝑛 𝐼 𝑛 −1 Λ. n × n n × n

- 17. Application: Bayesian Computation Without Likelihood 17 KBR for kernel posterior mean: ABC (Approximate Bayesian Computation): 1). Generate a sample 𝑋𝑡 from the prior Π; 2). Generate a sample 𝑌𝑡 from 𝑃(𝑌|𝑋𝑡); 3). If 𝐷(𝑦∗, 𝑌𝑡) < 𝜏, accept 𝑋𝑡; otherwise reject; 4) Go to 1). 1). Generate samples 𝑋1, … , 𝑋 𝑛 from the prior Π; 2). Generate a sample 𝑌𝑡 from 𝑃(𝑌|𝑋𝑡); 3). Compute Gram matrices 𝐺 𝑋 and 𝐺 𝑌 with (𝑋1, 𝑌1),…,(𝑋 𝑛, 𝑌𝑛); 4). 𝑅 𝑥|𝑦 = Λ𝐺 𝑌 Λ𝐺 𝑌 2 + 𝛿 𝑛 𝐼 𝑛 −1 Λ. 𝑚 𝑄 𝑥|𝑦∗ ⋅ = 𝐤 𝑋 ⋅ 𝑇 𝑅 𝑥|𝑦 𝐤 𝑌 𝑦∗ Efficiency can be arbitrarily poor for small 𝜏. Only obtain expectations of functions in RKHS Note: D is a distance measure in the space of Y.

- 18. 18 Application: Kernel Monte Carlo Filter X1 X2 X3 XT Z1 Z2 Z3 ZT … Transition of state 𝑝(𝑋, 𝑍) = 𝜋(𝑋1) 𝑝(𝑍𝑡|𝑋𝑡) 𝑇 𝑡=1 𝑞(𝑋𝑡+1|𝑋𝑡) 𝑇−1 𝑡=1 Problem statement Training data: (𝑋1, 𝑍1, … , 𝑋 𝑇, 𝑍 𝑇) Kernel mean of posterior: 𝑚 𝑥 𝑡|𝑧1:𝑡 = 𝑘 𝑥 ∙, 𝑋𝑖 𝑝 𝑥𝑡 𝑧1:𝑡 𝑑𝑥𝑡 = 𝛼 𝑡 𝑘 𝑋(⋅, 𝑋𝑖)𝑛 𝑖=1 𝑖 State estimation: pre-image: or the sample point with maximum weight

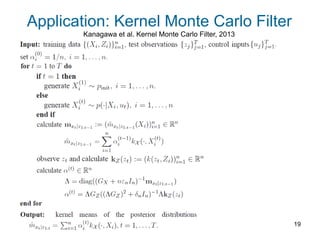

- 19. 19 Kanagawa et al. Kernel Monte Carlo Filter, 2013 Application: Kernel Monte Carlo Filter

- 20. 20 NAI: naïve method KBR: KBR + KBR NN: PF + K-nearest neighbor KMC: Kernel Monte Carlo KMC for Robot localizationKanagawa et al. Kernel Monte Carlo Filter, 2013 training sample = 200 : true location : estimate

- 21. Conclusions 21 A new nonparametric / kernel approach to Bayesian inference • Kernel mean embedding: using positive definite kernels to represent probabilities • “Nonparametric” Bayesian inference : No densities are needed but data. • Bayesian inference with matrix computation. Computation is done with Gram matrices. No integral, no approximate inference. • More suitable for high dimensional data than smoothing kernel approach.

- 22. References Fukumizu, K., L. Song, A. Gretton (2013) Kernel Bayes' Rule: Bayesian Inference with Positive Definite Kernels. Journal of Machine Learning Research. 14:3753−3783. Song, L., Gretton, A., and Fukumizu, K. (2013) Kernel Embeddings of Conditional Distributions. IEEE Signal Processing Magazine 30(4), 98- 111 Kanagawa, M., Nishiyama, Y., Gretton, A., Fukumizu. K. (2013) Kernel Monte Carlo Filter. arXiv:1312.4664 22

- 23. Appendix I. Importance sampling 23

- 24. Appendix II. Simulated Gaussian data • Simulated data: (𝑋𝑖, 𝑌𝑖)~𝑁( 0 𝑑/2, 𝟏 𝑑/2 𝑇 , 𝑉), 𝑖 = 1, … , 𝑁 𝑉~𝐴 𝑇 𝐴 + 2𝐼 𝑑, 𝐴~𝑁 0, 𝐼 𝑑 , 𝑁 = 200 • Prior Π: 𝑈𝑗~𝑁 0; 0.5 ∗ 𝑉𝑋𝑋 , 𝑗 = 1, … , 𝐿, 𝐿 = 200 • Dimension: 𝑑 = 2, … , 64 • Gaussian kernels are used for both methods • Bandwidth parameters are selected with CV or the median of the pair-wise distances 24 Validation: Mean square errors (MSE) of the estimates of 𝑥𝑞 𝑥 𝑦 𝑑𝑥 over 1000 random points 𝑦~𝑁(0, 𝑉𝑌𝑌). ℎ 𝑋 = ℎ 𝑌

- 25. 25 KBR: Kernel Bayes Rule KDE+IW: Kernel density estimation + Importance weighting. COND: belonging to KBR ABC: Approximate Bayesian Computation Numbers at marks are sample sizes

![Positive semi-definite kernel

7

Def. W: set; k : W x W R

k is positive semi-definite if k is symmetric, and for any

the matrix (Gram matrix) satisfies

– Examples on Rm:

• Gaussian kernel

• Laplace kernel

• Polynomial kernel

𝑐 = [𝑐1, … , 𝑐 𝑛] 𝑇∈ 𝑅 𝑛,

𝑛 ∈ 𝐍, 𝑥1, … , 𝑥 𝑛 ∈ W,

𝐺 𝑋: 𝑘 𝑋𝑖, 𝑋𝑗

𝑖𝑗

𝑐 𝑇

𝐺 𝑋 𝑐 = 𝑐𝑖 𝑐𝑗 𝑘 𝑋𝑖, 𝑋𝑗

𝑛

𝑖,𝑗=1 ≥ 0.

𝑘 𝐺 𝑥, 𝑦 = exp −

1

2𝜎2

||𝑥 − 𝑦||2

𝑘 𝐿 𝑥, 𝑦 = exp −𝛼 |𝑥𝑖 − 𝑦𝑖|

𝑚

𝑖=1

𝑘 𝑃 𝑥, 𝑦 = 𝑥 𝑇 𝑦 + 𝑐 𝑑

(𝑐 ≥ 0, 𝑑 ∈ 𝐍)

(𝛼 > 0)

(𝜎 > 0)

𝑘 𝑋𝑖, 𝑋𝑗 =<Φ 𝑋𝑖 , Φ 𝑋𝑗 >

positive definite: 𝑐 𝑇

𝐺 𝑋 𝑐 > 0.](https://image.slidesharecdn.com/yanxukernelbayesv3-140501103107-phpapp02/85/Kernel-Bayes-Rule-7-320.jpg)